|

Docker has become a pivotal tool in system design. It makes applications easier to build, deploy, and manage, and it offers portability, scalability, and consistency across different computing environments.


What is Docker?

Docker is an open-source containerization platform that lets you package an application and all its dependencies into a standardized unit called a container. Containers are lightweight, which makes them portable, and they are isolated from the underlying infrastructure and from one another. You can run a Docker image as a container on any machine where Docker is installed, independent of the host's own libraries and, to a large extent, its operating system.

Importance of Docker in System Design

Docker plays a crucial role in modern system design for several reasons:

- Portability: Docker containers can run consistently across different environments (development, testing, staging, production), ensuring that the application works the same way everywhere. This eliminates the “it works on my machine” problem.

- Scalability: Docker simplifies scaling applications. You can quickly spin up multiple containers to handle increased load and manage them using container orchestration tools like Kubernetes. This makes it easier to design systems that can scale horizontally.

- Continuous Integration and Continuous Deployment (CI/CD): Docker integrates seamlessly with CI/CD pipelines, enabling automated testing, building, and deployment of applications. This accelerates development cycles and improves software quality.

- Consistency: Docker ensures that applications run consistently across different environments by encapsulating all dependencies and configurations within the container. This reduces environment-specific bugs and streamlines the development process.

- Microservices Architecture: Docker is well-suited for microservices architecture, where an application is composed of small, independent services that can be developed, deployed, and scaled independently. Docker containers provide the ideal encapsulation for each microservice.

Key Components of Docker

Docker consists of several key components that work together to facilitate containerized application development and deployment. Here are the main components:

- Docker Engine: The core part of Docker, it is responsible for creating and managing containers. It consists of:

  - Docker Daemon (dockerd): Runs on the host machine and is responsible for building, running, and managing Docker containers.

  - Docker CLI: A command-line interface that allows users to interact with the Docker Daemon via commands.

  - REST API: Provides an interface that programs can use to communicate with the Docker Daemon.

- Docker Images: Read-only templates used to create containers. An image includes everything needed to run a piece of software, including the code, runtime, libraries, environment variables, and configuration files.

- Docker Containers: Instances of Docker images that run applications. Containers are isolated from each other and the host system, ensuring that they have their own environment.

- Dockerfile: A text file that contains a set of instructions for building a Docker image. It specifies the base image, application code, dependencies, environment variables, and commands to run.

- Docker Compose: A tool for defining and running multi-container Docker applications. It uses a YAML file (docker-compose.yml) to configure the application’s services, networks, and volumes.

- Docker Hub: A cloud-based registry service for storing and distributing Docker images. Users can upload their own images and access publicly available images.

- Docker Swarm: A native clustering and orchestration tool for Docker. It allows you to manage a cluster of Docker nodes as a single virtual system, facilitating container orchestration, scaling, and load balancing.

- Docker Network: Provides networking capabilities for Docker containers. Docker creates default networks that containers can connect to, and users can define their own networks to control how containers communicate with each other and with external services.

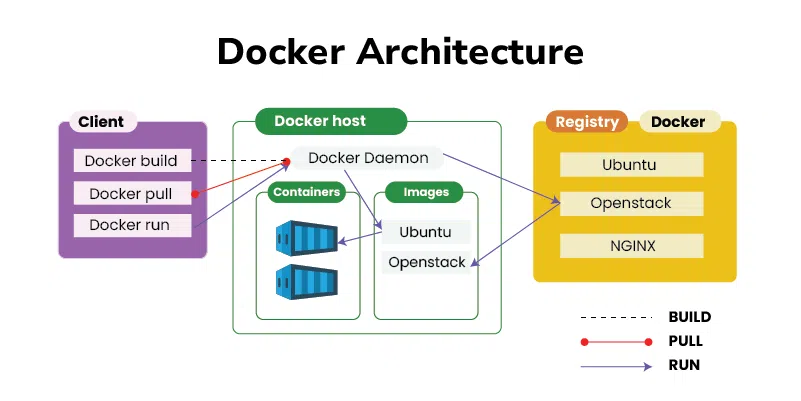

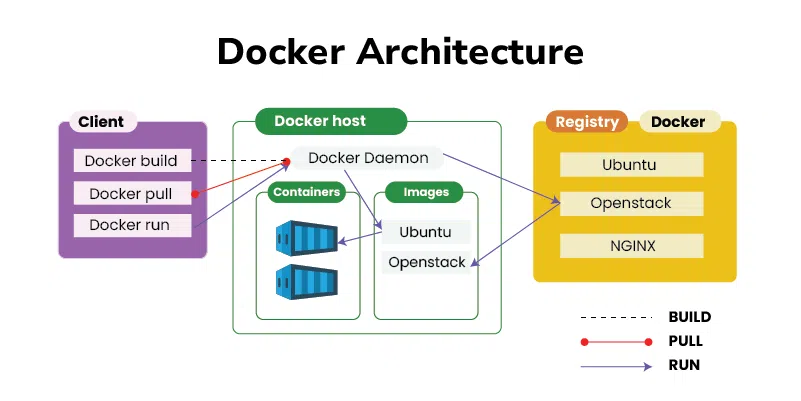

Docker Architecture

Docker architecture is composed of several layers and components that work together to create, manage, and orchestrate containers. Below is an in-depth explanation of Docker’s architecture:

1. Docker Engine

Docker Engine is the core component of Docker, responsible for building and running containers. It has three main parts:

- Docker Daemon (dockerd): The background service running on the host machine. It listens for Docker API requests and manages Docker objects like images, containers, networks, and volumes.

- Docker CLI: The command-line interface used by users to interact with Docker. The CLI communicates with the Docker Daemon using the Docker API.

- REST API: A set of HTTP endpoints that provide an interface for interacting with the Docker Daemon programmatically. This API is used by both the Docker CLI and other tools to control Docker.
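To make the CLI, API, and daemon relationship concrete, here is a minimal sketch of a program talking to the daemon directly, using only the Python standard library. It assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock; the helper names UnixSocketHTTPConnection and engine_get are illustrative and not part of any Docker library.

```python
import http.client
import json
import os
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that speaks HTTP over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name is only used for the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send GET <path> to the Docker daemon and return the decoded JSON body.

    Returns None when the socket does not exist (Docker is not running here).
    """
    if not os.path.exists(socket_path):
        return None
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()


if __name__ == "__main__":
    # /version is an Engine API endpoint that reports daemon version details.
    info = engine_get("/version")
    print("Docker daemon not reachable" if info is None else info.get("Version"))
```

Reading the socket usually requires root or membership in the docker group, so the call may raise a permission error for other users.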

2. Docker Objects

Docker uses several key objects to build and run containerized applications:

- Images: Read-only templates used to create containers. Images are built from Dockerfiles and can be stored in Docker registries like Docker Hub.

- Containers: Instances of Docker images. Containers run applications and are isolated from the host system and other containers.

- Volumes: Persistent storage for Docker containers. Volumes can be shared between containers and are used to store data outside of the container’s filesystem.

- Networks: Allow containers to communicate with each other and with external systems. Docker supports different types of networks, including bridge networks, overlay networks, and host networks.

3. Dockerfile

A Dockerfile is a text file containing a series of instructions for building a Docker image. Instructions that change the filesystem, such as RUN, COPY, and ADD, each create a new layer in the image, while the remaining instructions only record metadata. Typical instructions specify a base image, copy application files, install dependencies, and define environment variables.
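One practical consequence of building an image instruction by instruction is build caching: when a step changes, that step and every step after it must be rebuilt, while earlier steps are reused. The sketch below is a simplified model of that idea, not Docker's actual cache implementation (the real builder also takes the contents of copied files into account). The function name layer_cache_keys is hypothetical.

```python
import hashlib


def layer_cache_keys(instructions):
    """Return one cache key per instruction, chained to the previous key.

    Because each key depends on its parent key, changing one instruction
    changes the key of that step and of every later step.
    """
    keys = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(digest)
        parent = digest
    return keys


original = layer_cache_keys([
    "FROM python:latest",
    "COPY main.py /",
    'CMD ["python", "./main.py"]',
])
edited = layer_cache_keys([
    "FROM python:latest",
    "COPY app.py /",  # only this step was edited
    'CMD ["python", "./main.py"]',
])

# Compare keys step by step: True means the cached step could be reused.
reusable = [a == b for a, b in zip(original, edited)]
print(reusable)
```

This is why Dockerfiles are usually ordered with rarely changing steps (base image, dependency installation) first and frequently changing steps (copying application code) last.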

4. Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. It uses a YAML file (docker-compose.yml) to configure the application’s services, networks, and volumes. Docker Compose simplifies the management of complex applications by allowing you to define and run multiple containers as a single application.

5. Docker Swarm

Docker Swarm is Docker’s native clustering and orchestration tool. It transforms a group of Docker engines into a single virtual Docker engine. Swarm enables the deployment, scaling, and management of multi-container applications across a cluster of machines. Key features of Docker Swarm include:

- Node Management: Nodes are individual Docker engines participating in the Swarm cluster. There are two types of nodes: managers and workers. Managers handle the cluster management tasks, while workers execute the containers.

- Services: A service is the definition of how a container should behave in production. It includes information on which image to use, network settings, and scaling policies.

- Tasks: A task is a single container running in the Swarm. Tasks are distributed across nodes by the Swarm manager.
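The relationship between a service, its tasks, and the worker nodes can be illustrated with a toy placement routine. This is only a sketch of a "spread" style strategy (each new task goes to the least-loaded worker); it is not Swarm's real scheduler, which also considers constraints, resources, and node availability. The function name spread_tasks and the node names are made up for the example.

```python
def spread_tasks(service, replicas, workers):
    """Assign `replicas` tasks of `service` to workers, least-loaded first.

    Tasks are named "<service>.<slot>", mirroring how Swarm labels them.
    """
    placement = {worker: [] for worker in workers}
    for slot in range(1, replicas + 1):
        # min() returns the first worker among those with the fewest tasks.
        target = min(workers, key=lambda worker: len(placement[worker]))
        placement[target].append(f"{service}.{slot}")
    return placement


placement = spread_tasks("web", replicas=5, workers=["worker-1", "worker-2", "worker-3"])
for node, tasks in placement.items():
    print(node, tasks)
```

With five replicas and three workers, no worker ends up with more than one task above any other, which is the property a spread strategy aims for.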

6. Docker Networking

Docker provides several networking options to control how containers communicate:

- Bridge Network: The default network driver, allowing containers on the same host to communicate with each other.

- Host Network: Containers use the host’s network stack directly, offering improved performance at the expense of isolation.

- Overlay Network: Enables containers running on different Docker hosts to communicate, typically used in Swarm clusters.

- None Network: Completely disables networking for a container, useful for isolated workloads.

7. Docker Storage

Docker provides different storage options to manage data:

- Volumes: Preferred method for persisting data, stored outside the container’s filesystem.

- Bind Mounts: Mounts a directory or file from the host into the container. Useful for sharing data between the host and the container.

- Tmpfs Mounts: Creates temporary storage that is only stored in the host’s memory and never written to the filesystem. Useful for sensitive data that does not need to persist.
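The three storage options map onto the --mount flag of docker run, which takes a type of volume, bind, or tmpfs. The sketch below only assembles the command line so the differences are visible side by side; it does not run Docker. The helper names mount_flag and run_command, the volume name app-data, and the paths are assumptions chosen for illustration, and requiring a source for volumes is a simplification.

```python
import shlex


def mount_flag(kind, target, source=None):
    """Build the --mount arguments for one volume, bind, or tmpfs mount."""
    if kind not in {"volume", "bind", "tmpfs"}:
        raise ValueError(f"unknown mount type: {kind}")
    if kind == "tmpfs":
        # tmpfs lives in host memory only, so it has no source.
        if source is not None:
            raise ValueError("tmpfs mounts do not take a source")
        return ["--mount", f"type=tmpfs,target={target}"]
    if source is None:
        raise ValueError(f"{kind} mounts need a source in this sketch")
    return ["--mount", f"type={kind},source={source},target={target}"]


def run_command(image, mounts):
    """Return the full `docker run` argument list for an image and its mounts."""
    command = ["docker", "run", "--rm"]
    for mount in mounts:
        command += mount
    command.append(image)
    return command


command = run_command(
    "python-test",
    [
        mount_flag("volume", "/data", source="app-data"),     # managed by Docker
        mount_flag("bind", "/app/config", source="/srv/config"),  # host directory
        mount_flag("tmpfs", "/run/secrets"),                  # memory only
    ],
)
print(shlex.join(command))
```

The resulting list can be passed to subprocess.run on a host where Docker is installed.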

8. Docker Registry

A Docker Registry is a storage and distribution system for Docker images. Docker Hub is the default public registry, but you can also set up private registries. Registries store image repositories, which consist of multiple image versions (tags).
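The registry, repository, and tag all appear in an image reference such as python:latest. The sketch below shows, under simplifying assumptions, how a short reference expands to a full one: a missing registry defaults to Docker Hub (docker.io), official Docker Hub images live under library/, and a missing tag defaults to latest. It ignores digest references (name@sha256:...), and parse_image_reference is a hypothetical helper, not a Docker API.

```python
def parse_image_reference(reference):
    """Split an image reference into (registry, repository, tag)."""
    first, separator, rest = reference.partition("/")
    # The first component is a registry host if it looks like one.
    if separator and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", reference

    # A tag can only appear in the last path segment.
    last_segment = remainder.rsplit("/", 1)[-1]
    if ":" in last_segment:
        repository, tag = remainder.rsplit(":", 1)
    else:
        repository, tag = remainder, "latest"

    # Official images on Docker Hub are stored under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


for ref in ["python:latest", "myuser/myapp", "localhost:5000/app", "ghcr.io/org/app:1.2"]:
    print(ref, "->", parse_image_reference(ref))
```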

Steps to Install and Run Docker

The following steps guide you through installing Docker on Ubuntu:

Step 1: Remove old versions of Docker

Run the following command to remove any older Docker packages:

    $ sudo apt-get remove docker docker-engine docker.io containerd runc

Step 2: Install Docker Engine

The following commands add Docker's package repository and install Docker Engine:

    $ sudo apt-get update
    $ sudo apt-get install \
        ca-certificates \
        curl \
        gnupg \
        lsb-release
    $ sudo mkdir -p /etc/apt/keyrings
    $ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg
    $ echo \
        "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
        $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
    $ sudo apt-get update
    $ sudo apt-get install docker-ce docker-ce-cli containerd.io docker-compose-plugin
    $ sudo groupadd docker
    $ sudo usermod -aG docker $USER

The last two commands add your user to the docker group so that Docker can be run without sudo. Log out and back in for the group change to take effect.

Step 3: Verify the Docker installation

Check that Docker is installed correctly by running a test container with the following command:

    $ sudo docker run hello-world
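If the installation needs to be checked from a script (for example, before an automated build), a small Python helper can do it. This is a minimal sketch using only the standard library; docker_cli_version is a hypothetical name, and it only confirms that the CLI is on the PATH and responds to --version, not that the daemon is running.

```python
import shutil
import subprocess


def docker_cli_version(executable="docker"):
    """Return the output of `<executable> --version`, or None if unavailable."""
    # shutil.which returns None when the executable is not on the PATH.
    if shutil.which(executable) is None:
        return None
    result = subprocess.run(
        [executable, "--version"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None
    return result.stdout.strip()


if __name__ == "__main__":
    version = docker_cli_version()
    print(version if version else "Docker CLI not found on PATH")
```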

Containerizing an Application Using Docker

The following steps walk through containerizing an application with Docker:

Step 1: Create a Dockerfile and a Python application

Create a folder containing two files: Dockerfile and main.py.

Step 2: Develop the Python code

Edit main.py so that it contains the code below, or write your own Python code.

    #!/usr/bin/env python3

    print("Docker and GFG rock!")

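Step 3: Develop a Dockerfile

Edit the Dockerfile so that it contains the following instructions. FROM selects the base image, COPY adds main.py to the image's root directory, and CMD sets the command that runs when a container starts.

    FROM python:latest
    COPY main.py /
    CMD [ "python", "./main.py" ]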

Step 4: Create a Docker image

Once you have created and edited main.py and the Dockerfile, build an image containing your application by running the following command:

    $ sudo docker build -t python-test .

The -t option sets the name (tag) of the image; here the image is named python-test. The trailing dot tells Docker to use the current directory as the build context.

Step 5: Run the Docker container

Once the image is built, your code is ready to launch. The container prints the message from main.py and then exits.

    $ sudo docker run python-test

What are Docker Images?

A Docker image is a file made up of multiple layers that is used to execute code in a Docker container. It is an executable software package that includes everything needed to run an application, and it determines how a container is instantiated: which software components run and how. A Docker container, in turn, is a virtual environment that bundles the application code with all the dependencies required to run it, so the application runs quickly and reliably from one computing environment to another.

Docker Containers

A Docker container is a runtime instance of a Docker image. It packages an application with everything it needs, such as libraries and other dependencies, so the application can run in isolation.

- For example, suppose there is an image of Ubuntu with an NGINX server installed. When this image is run with the docker run command, a container is created and the NGINX server runs on Ubuntu inside it.

Use Cases of Docker for System Design

Docker is highly beneficial in various system design scenarios due to its capabilities in providing lightweight, portable, and consistent runtime environments. Here are some common use cases of Docker in system design:

- Microservices Architecture

  - Isolation and Independence: Each microservice runs in its own Docker container, allowing developers to isolate dependencies and manage service versions independently.

  - Scalability: Containers can be easily scaled horizontally by running multiple instances, facilitating load balancing and high availability.

  - Deployment Simplification: Docker simplifies the deployment of microservices by providing a consistent environment from development to production.

- Continuous Integration and Continuous Deployment (CI/CD)

  - Automated Builds and Tests: Docker images can be built and tested automatically in CI/CD pipelines, ensuring that code changes are consistently tested in the same environment.

  - Consistent Environments: Containers ensure that the application runs the same way in development, testing, and production environments, reducing “works on my machine” issues.

  - Rollbacks and Rollouts: Docker makes it easier to roll back to previous versions or roll out new versions of applications by managing image tags.

- DevOps and Infrastructure as Code (IaC)

  - Environment Replication: Docker allows developers to replicate production-like environments on their local machines, improving the development and debugging process.

  - Configuration Management: Docker Compose and Docker Swarm provide tools for defining and running multi-container Docker applications, aiding in infrastructure management and deployment.

  - Infrastructure Automation: Docker integrates well with orchestration tools like Kubernetes, enabling automated deployment, scaling, and management of containerized applications.

- Platform as a Service (PaaS)

  - Self-Contained Environments: Docker containers encapsulate everything an application needs to run, including code, runtime, libraries, and system tools.

  - Resource Efficiency: Containers share the host OS kernel, making them more resource-efficient compared to virtual machines.

  - Portability: Applications packaged in Docker containers can run on any system with Docker installed, enhancing portability across different environments.

- Legacy Application Modernization

  - Containerizing Legacy Applications: Docker can be used to containerize legacy applications, allowing them to run on modern infrastructure without code changes.

  - Gradual Migration: Legacy applications can be gradually decomposed into microservices, running new and old components side-by-side using Docker.

- High Performance Computing (HPC)

  - Resource Isolation: Docker containers provide isolation of resources, ensuring that HPC applications can run efficiently without interference.

  - Easy Deployment: Complex HPC applications with numerous dependencies can be packaged into Docker containers, simplifying deployment across different environments.

Docker’s integration with cloud platforms is a crucial aspect of modern system design, providing scalable, portable, and efficient solutions for deploying applications in the cloud. Here’s a look at how Docker integrates with various cloud platforms:

- Amazon Web Services (AWS)

  - Amazon Elastic Container Service (ECS): ECS is a highly scalable container orchestration service that supports Docker containers. It allows you to run, stop, and manage containers on a cluster of Amazon EC2 instances.

  - Amazon Elastic Kubernetes Service (EKS): EKS is a managed Kubernetes service that makes it easy to run Kubernetes on AWS without needing to install and operate your own Kubernetes control plane.

  - AWS Fargate: Fargate is a serverless compute engine for containers that works with both ECS and EKS. It allows you to run containers without managing the underlying infrastructure.

  - Amazon ECR (Elastic Container Registry): ECR is a fully managed Docker container registry that makes it easy to store, manage, and deploy Docker container images.

- Microsoft Azure

  - Azure Kubernetes Service (AKS): AKS is a managed Kubernetes service that simplifies deploying a managed Kubernetes cluster in Azure.

  - Azure Container Instances (ACI): ACI allows you to run Docker containers directly on the Azure cloud without managing the underlying infrastructure.

  - Azure Container Registry (ACR): ACR is a managed Docker registry service based on the open-source Docker Registry 2.0, allowing you to store and manage private Docker container images.

  - Azure App Service: This service enables you to deploy Docker containers as web apps, providing a PaaS (Platform as a Service) environment.

- Google Cloud Platform (GCP)

  - Google Kubernetes Engine (GKE): GKE is a managed Kubernetes service that provides a robust and scalable environment for deploying containerized applications.

  - Google Cloud Run: Cloud Run allows you to run stateless HTTP containers that can automatically scale based on incoming traffic.

  - Google Container Registry (GCR): GCR is a private container image registry that supports Docker images, providing a secure and scalable storage solution. Google has since deprecated it in favor of Artifact Registry, which is the recommended choice for new projects.

Common Challenges and Solutions

Integrating Docker into your workflow and deploying Dockerized applications in the cloud can present several challenges. Here are some common challenges and their solutions:

- Container Orchestration

  - Challenge: Managing a large number of containers manually can be complex and error-prone.

  - Solution: Use container orchestration platforms like Kubernetes, Docker Swarm, or cloud-specific solutions (ECS, AKS, GKE) to automate deployment, scaling, and management of containerized applications.

- Networking

  - Challenge: Networking in a containerized environment can be complex, especially when dealing with multi-host networking and service discovery.

  - Solution:

    - Use built-in networking solutions provided by orchestration platforms (e.g., Kubernetes Service, Ingress).

    - Employ service meshes like Istio or Linkerd for advanced networking capabilities, including load balancing, traffic management, and secure communication between services.

- Storage Persistence

  - Challenge: Containers are ephemeral, meaning data stored inside a container is lost when the container is removed.

  - Solution:

    - Use persistent storage solutions like Docker volumes or Kubernetes Persistent Volumes.

    - Utilize cloud storage solutions (AWS EBS, Azure Disks, Google Persistent Disks) for persistent and scalable storage options.

- Security

  - Challenge: Containers introduce new security risks, such as vulnerabilities in container images and misconfigurations.

  - Solution:

    - Regularly scan container images for vulnerabilities using tools like Clair, Trivy, or cloud-native solutions.

    - Follow security best practices, such as running containers with the least privileges, using signed images, and applying network policies to restrict communication.

    - Use security tools like Docker Bench for Security or Kubernetes Pod Security Admission (the replacement for Pod Security Policies, which were removed in Kubernetes 1.25) to enforce security standards.
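As one illustration of running containers with the least privileges, the sketch below assembles a docker run command that uses a non-root user, a read-only root filesystem, no Linux capabilities, and no privilege escalation. The flags themselves are standard docker run options; the function name hardened_run_args, the UID/GID of 1000, and the image name are assumptions for the example, and a real application may need some of these restrictions relaxed.

```python
def hardened_run_args(image, uid=1000, gid=1000):
    """Return a `docker run` argument list with common least-privilege options."""
    return [
        "docker", "run", "--rm",
        "--user", f"{uid}:{gid}",                # do not run as root inside the container
        "--read-only",                           # mount the root filesystem read-only
        "--cap-drop", "ALL",                     # drop every Linux capability
        "--security-opt", "no-new-privileges",   # block privilege escalation via setuid binaries
        image,
    ]


args = hardened_run_args("python-test")
print(" ".join(args))
```

The list can be passed to subprocess.run on a host with Docker installed.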

|