
Unlike traditional application architectures, microservices architectures depend heavily on inter-service communication. Two of the main enablers are service discovery and load balancing. At first glance they look similar, but they play different roles. This article covers the basics of service discovery, the principles of load balancing, and guidelines for deciding when to use each.


What are Service Discovery and Load Balancing?

Service discovery is the process by which a service on the network finds and connects to other services. It is especially relevant in microservices, where an application is decomposed into loosely coupled services that run independently of one another. Service discovery keeps track of which services are available, where their instances are located on the network, and how other services can reach them.

- Client-side Service Discovery:

  - The client is responsible for finding the network locations of available service instances and for distributing its requests across them.

  - For example, the client queries a service registry (such as Netflix Eureka), receives a list of available instances, and picks one using a load balancing algorithm.

  - This approach requires every client to implement both the discovery logic and the load balancing logic.

- Server-side Service Discovery:

  - The client sends its request to an intermediary such as a load balancer or router, which looks up the requested service in the registry on the client's behalf.

  - The load balancer, for example AWS Elastic Load Balancer, then forwards the request to an available instance of that service.

  - This approach keeps clients simple, because a dedicated component handles both service discovery and load balancing.
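The client-side flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Eureka or any real registry works: `ServiceRegistry`, `Instance`, and `DiscoveringClient` are hypothetical names, the registry lives in memory, and the client uses a simple round-robin choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """Network location of one service instance (hypothetical type)."""
    host: str
    port: int


class ServiceRegistry:
    """Toy in-memory registry mapping a service name to its instances."""

    def __init__(self) -> None:
        self._instances: dict[str, list[Instance]] = {}

    def register(self, service: str, instance: Instance) -> None:
        instances = self._instances.setdefault(service, [])
        if instance not in instances:
            instances.append(instance)

    def deregister(self, service: str, instance: Instance) -> None:
        instances = self._instances.get(service, [])
        if instance in instances:
            instances.remove(instance)

    def lookup(self, service: str) -> list[Instance]:
        # Return a copy so callers cannot mutate registry state.
        return list(self._instances.get(service, []))


class DiscoveringClient:
    """Client-side discovery: the client asks the registry, then picks an instance itself."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self._registry = registry
        self._counters: dict[str, int] = {}

    def choose(self, service: str) -> Instance:
        instances = self._registry.lookup(service)
        if not instances:
            raise LookupError(f"no instances registered for {service!r}")
        # Round-robin over whatever the registry reports right now.
        index = self._counters.get(service, 0)
        self._counters[service] = index + 1
        return instances[index % len(instances)]
```

Because `choose` calls `lookup` on every request, an instance that is deregistered stops receiving traffic on the next call. A real client would usually cache the list and refresh it periodically instead of querying the registry each time.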

Load balancing is the process of distributing network traffic across multiple servers. It ensures that no single server is overloaded, which improves reliability and performance. Load balancers help absorb traffic spikes, prevent server overload, and maintain high availability by rerouting traffic from failed servers to healthy ones.
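As a rough illustration of the distribution step, here is a minimal round-robin sketch in Python. Round-robin is only one of several possible algorithms, and the names `RoundRobinBalancer` and `simulate` are assumptions made for this example rather than part of any real load balancer.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order (hypothetical class)."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self) -> str:
        return next(self._rotation)


def simulate(balancer: RoundRobinBalancer, requests: int) -> Counter:
    """Count how many of `requests` each server would receive."""
    return Counter(balancer.next_server() for _ in range(requests))
```

Calling `simulate(RoundRobinBalancer(["a", "b", "c"]), 9)` gives each of the three servers three requests, which is the even spread the paragraph above describes.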

There are several types of load balancers:

- Hardware Load Balancers: Dedicated physical appliances built for traffic distribution, typically used in large organizations where high network speed and stability are essential. Examples include F5 and Citrix NetScaler.

- Software Load Balancers: Applications such as Nginx, HAProxy, or Envoy that route traffic in software. They can be installed on ordinary servers and are flexible and scalable.

- Cloud-based Load Balancers: Managed services offered by cloud providers, such as AWS Elastic Load Balancer or Google Cloud Load Balancer. They are relatively simple to configure and adjust easily as traffic changes.

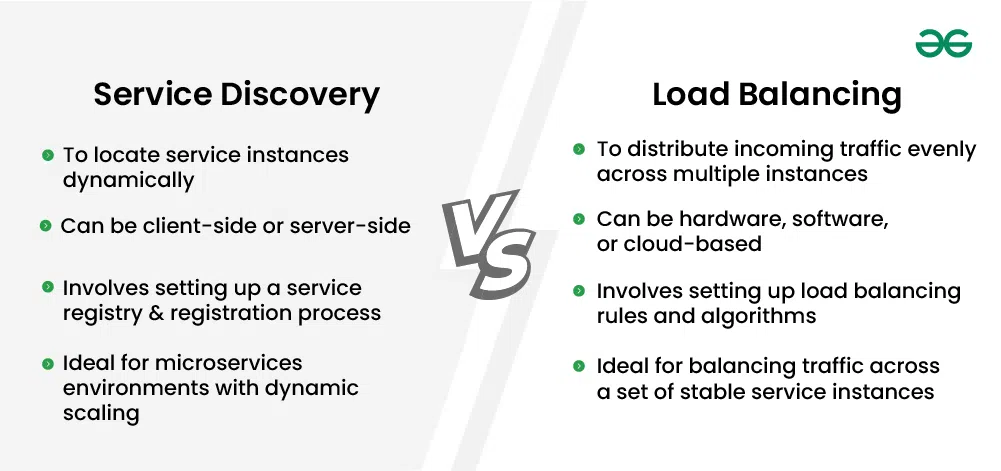

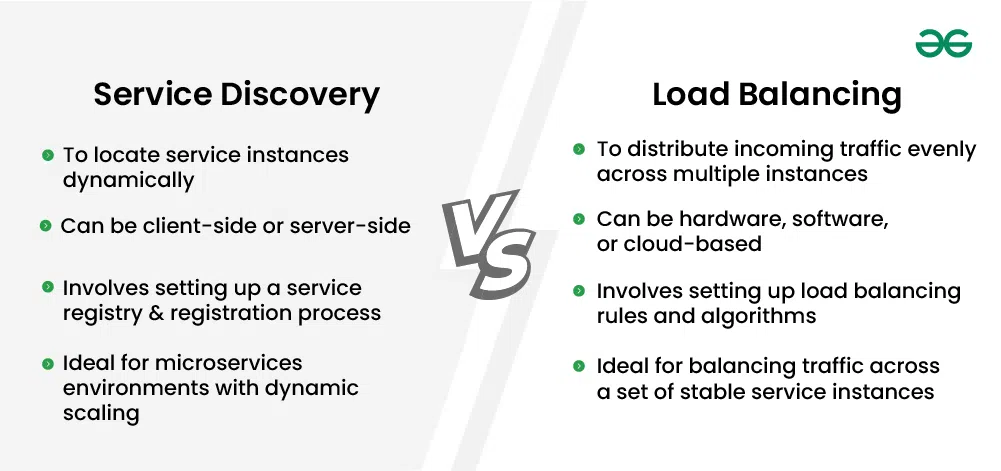

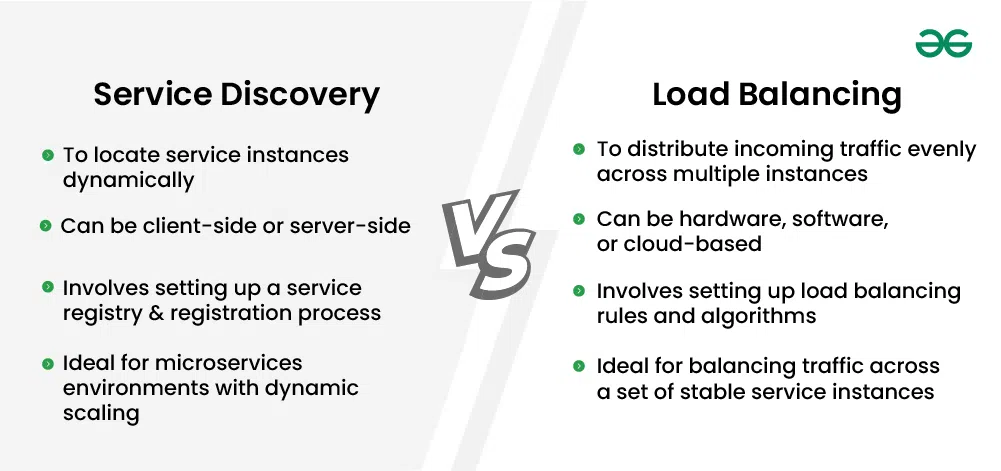

Differences Between Service Discovery and Load Balancing

Below are the differences between Service Discovery and Load Balancing:

| Feature | Service Discovery | Load Balancing |
|---|---|---|
| Purpose | To locate service instances dynamically | To distribute incoming traffic evenly across multiple instances |
| Mechanism | Maintains a registry of service instances and their locations | Routes traffic based on load balancing algorithms |
| Implementation | Can be client-side or server-side | Can be hardware, software, or cloud-based |
| Dynamic Scaling | Automatically updates registry as services scale up/down | Distributes load among available instances, scaling as needed |
| Examples | Consul, Eureka, Etcd | Nginx, HAProxy, AWS ELB, Google Cloud Load Balancer |
| Configuration | Involves setting up a service registry and registration process | Involves setting up load balancing rules and algorithms |
| Direct Communication | Clients can directly communicate with service instances | Clients typically communicate through the load balancer |
| Failure Handling | Can detect and update registry with failing instances | Can route traffic away from failing instances |
| Use Case | Ideal for microservices environments with dynamic scaling | Ideal for balancing traffic across a set of stable service instances |

When to Use Service Discovery?

Service discovery is a good fit in the following situations:

- Dynamic Environments: Use service discovery when service instances start and stop frequently, for example when pods (instances of services) in a Kubernetes cluster are created and destroyed dynamically. Service discovery registers newly created instances so that other services can locate them.

- Microservices Architecture: It is crucial in microservices, where each service can run several instances in parallel and services must be able to reach each other at runtime. Service discovery lets each microservice find the current instances of the services it depends on.

- Decentralized Services: When services run across multiple geographic locations or data centers, service discovery helps manage their network addresses effectively. It also lets a service find the nearest or most suitable instance to reduce response time.
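One common way to keep a registry accurate while instances come and go is to have each instance send periodic heartbeats and to drop entries that go quiet. The sketch below assumes that technique; `HeartbeatRegistry`, its `ttl_seconds` parameter, and the injectable `clock` are hypothetical names chosen for illustration, not the API of Consul, Eureka, or etcd.

```python
import time
from typing import Callable


class HeartbeatRegistry:
    """Toy registry that forgets instances whose heartbeats stop (hypothetical)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        # service name -> {address: time of last heartbeat}
        self._last_seen: dict[str, dict[str, float]] = {}

    def heartbeat(self, service: str, address: str) -> None:
        """Register a new instance or refresh an existing one."""
        self._last_seen.setdefault(service, {})[address] = self._clock()

    def live_instances(self, service: str) -> list[str]:
        """Return addresses whose last heartbeat is within the TTL."""
        now = self._clock()
        entries = self._last_seen.get(service, {})
        # Remove instances that have not reported in for longer than the TTL.
        expired = [addr for addr, seen in entries.items() if now - seen > self._ttl]
        for addr in expired:
            del entries[addr]
        return sorted(entries)
```

A newly created instance becomes discoverable with its first `heartbeat` call, and a destroyed one disappears from `live_instances` once its TTL elapses, with no manual cleanup.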

When to Use Load Balancing?

Load balancing is a good fit in the following situations:

- High Traffic Applications: Use load balancing for applications or services that receive a lot of traffic, so that no single server carries too much of the load. The load balancer spreads requests so that every server handles a reasonable share.

- Single Entry Point: It is useful when clients need a single point of contact in front of several backend services. This is especially true for web applications, where a load balancer serves as an application-layer reverse proxy that directs each request to the correct backend service.

- Failover and Redundancy: Load balancers monitor the health of instances and shift traffic from unhealthy instances to healthy ones, so that only healthy instances receive requests. This makes the service as a whole more reliable and available.
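The failover behavior can be sketched as a balancer that skips unhealthy backends and, among the healthy ones, prefers the backend with the fewest active connections. This is an illustrative sketch under assumptions: `Backend` and `HealthAwareBalancer` are hypothetical names, and health is set by hand through `mark_health`, where a real load balancer would run periodic health checks.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """One backend server as the balancer sees it (hypothetical type)."""
    name: str
    healthy: bool = True
    active_connections: int = 0


class HealthAwareBalancer:
    """Least-connections selection restricted to healthy backends."""

    def __init__(self, backends: list[Backend]) -> None:
        self._backends = backends

    def mark_health(self, name: str, healthy: bool) -> None:
        # Stand-in for the result of a periodic health check.
        for backend in self._backends:
            if backend.name == name:
                backend.healthy = healthy
                return
        raise KeyError(name)

    def acquire(self) -> Backend:
        """Pick the healthy backend with the fewest active connections."""
        candidates = [b for b in self._backends if b.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends available")
        # min() returns the first backend on ties, so order breaks ties.
        chosen = min(candidates, key=lambda b: b.active_connections)
        chosen.active_connections += 1
        return chosen

    def release(self, backend: Backend) -> None:
        """Call when a request finishes so the connection count stays accurate."""
        backend.active_connections = max(0, backend.active_connections - 1)
```

Once a backend is marked unhealthy, `acquire` never returns it until it is marked healthy again, which is the rerouting behavior described in the failover bullet.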

Conclusion

Service discovery and load balancing are both important for today's distributed, microservices-based applications, but they serve different functions. Service discovery lets services locate the other services they need to interact with in a large and constantly changing environment. Load balancing, on the other hand, prevents any single instance from being overwhelmed by distributing traffic evenly across instances.
