The Widrow-Hoff algorithm was developed by Bernard Widrow and his student Ted Hoff in the 1960s to minimize the mean square error between a desired output and the output produced by a linear predictor. This article explores the fundamentals of the Widrow-Hoff algorithm and its impact on the evolution of learning algorithms.

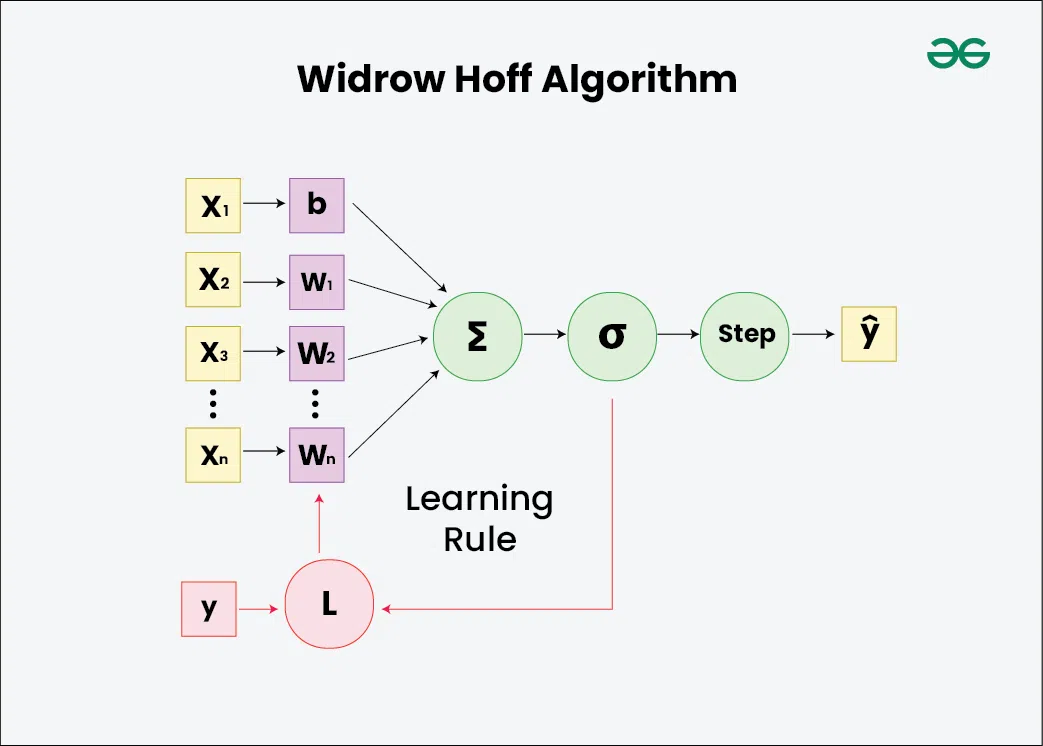

Understanding Widrow-Hoff Algorithm

The Widrow-Hoff learning algorithm, also known as the Least Mean Squares (LMS) algorithm, is used in machine learning, deep learning, and adaptive signal processing. It is primarily used for supervised learning, where the system iteratively adjusts its parameters to approximate a desired target function. It operates by updating the weights of a linear predictor so that the predicted output converges toward the desired output over time.

Weight Update Rule in Widrow-Hoff Algorithm

The update rule specifies how the weights of the Adaptive Linear Neuron (ADALINE) are adjusted based on the error between the desired output and the predicted output. The weight update rule in the Widrow-Hoff algorithm is given by:

$$w(t+1) = w(t) + \eta \, \big(d(t) - y(t)\big) \, x(t)$$

Here, $w(t)$ is the weight vector at step $t$, $\eta$ is the learning rate, $d(t)$ is the desired output, $y(t) = w(t)^\top x(t)$ is the predicted output, and $x(t)$ is the input vector.
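To make the update rule concrete, here is a minimal sketch of a single update step. The helper name `lms_update` and the sample values are illustrative assumptions, not part of the original article.

```python
import numpy as np

def lms_update(w, x, d, eta=0.1):
    """Apply one Widrow-Hoff update; return the new weights and the error.

    lms_update is a hypothetical helper written for this illustration.
    """
    y = np.dot(w, x)      # predicted output of the linear unit
    error = d - y         # desired output minus predicted output
    return w + eta * error * x, error

# Illustrative values: zero initial weights, one input, one target
w = np.zeros(2)
x = np.array([1.0, 2.0])
d = 1.0

w_new, err = lms_update(w, x, d)
print(w_new)                    # [0.1 0.2]
print(d - np.dot(w_new, x))     # 0.5, half the original error of 1.0
```

A single step does not remove the error; it only reduces it. How much it shrinks depends on the learning rate and on the magnitude of the input.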

Interpretation
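One common way to read the rule is as a stochastic gradient descent step. The sketch below assumes the predictor is $y(t) = w(t)^\top x(t)$ and takes half the squared error on the current sample as the cost (the factor $\tfrac{1}{2}$ is a convenience, not something stated in the original text):

$$E(t) = \tfrac{1}{2}\big(d(t) - w(t)^\top x(t)\big)^2$$

$$\nabla_w E(t) = -\big(d(t) - y(t)\big)\, x(t)$$

$$w(t+1) = w(t) - \eta \, \nabla_w E(t) = w(t) + \eta \, \big(d(t) - y(t)\big)\, x(t)$$

Under these assumptions the weights move along the input vector, scaled by the error: a large error produces a large correction, and a zero error leaves the weights unchanged.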

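Working Principle of Widrow-Hoff Algorithm

The key steps of the Widrow-Hoff algorithm are: initialize the weights, compute the predicted output for an input sample, compute the error between the desired and predicted outputs, update the weights with the weight update rule, and repeat over all samples for a number of epochs.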

Implementing Widrow-Hoff Algorithm for Linear Regression Problem

We will implement the Widrow-Hoff (LMS) learning algorithm using Python and NumPy to learn the weights of a linear regression model, then apply it to synthetic data and print the true weights alongside the learned weights. The steps are as follows:

Step 1: Define the Widrow-Hoff Learning Algorithm

The function below initializes the weights randomly and then, for each sample in each epoch, computes the prediction, the error, and the weight update.

    import numpy as np

    # Define the Widrow-Hoff (LMS) learning algorithm
    def widrow_hoff_learning(X, y, learning_rate=0.01, epochs=100):
        num_features = X.shape[1]
        weights = np.random.randn(num_features)  # Initialize weights randomly
        for _ in range(epochs):
            for i in range(X.shape[0]):
                prediction = np.dot(X[i], weights)       # Linear prediction for sample i
                error = y[i] - prediction                # Desired minus predicted
                weights += learning_rate * error * X[i]  # Widrow-Hoff update
        return weights

Step 2: Generate Random Dataset

Random data is generated using NumPy.

    # Generate some synthetic training data
    np.random.seed(0)
    X = np.random.randn(100, 2)  # 100 samples with 2 features
    true_weights = np.array([3, 5])  # True weights for generating y
    y = np.dot(X, true_weights) + np.random.randn(100)  # Add noise to simulate real data

Step 3: Add a Bias Term

A bias term (constant feature) is added to the feature matrix.

    # Add a bias term (constant feature) to X
    X = np.concatenate([X, np.ones((X.shape[0], 1))], axis=1)

Step 4: Apply the Widrow-Hoff Algorithm to Learn Weights

The function is called on the augmented feature matrix and the targets.

    # Apply the Widrow-Hoff algorithm to learn weights
    learned_weights = widrow_hoff_learning(X, y)

Complete Implementation of Widrow-Hoff Algorithm for Linear Regression Problem

Running the four steps above in order and then printing both weight vectors, for example with the statements below, completes the program:

    print("True Weights:", true_weights)
    print("Learned Weights:", learned_weights)

Output:

    True Weights: [3 5]
    Learned Weights: [3.06124683 5.09292019 0.03074168]

The first two learned weights are close to the true weights. The third value is the learned bias, which is near zero because the data was generated without an offset.

Applications of Widrow-Hoff Algorithm
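One well-known use of LMS in adaptive signal processing is system identification, where an adaptive filter learns to mimic an unknown system from its input and output. The sketch below is a minimal illustration under stated assumptions: the function name `lms_identify`, the three-tap "unknown" filter, the white-noise input, and the learning rate are all hypothetical choices made for this example, not taken from the original article.

```python
import numpy as np

def lms_identify(x, d, num_taps, eta=0.01):
    """Estimate FIR filter taps from input x and observed output d using LMS.

    lms_identify is a hypothetical helper written for this illustration.
    """
    w = np.zeros(num_taps)
    for n in range(num_taps - 1, len(x)):
        # The most recent num_taps input samples, newest first
        window = x[n - num_taps + 1 : n + 1][::-1]
        error = d[n] - np.dot(w, window)   # desired minus filter output
        w += eta * error * window          # Widrow-Hoff update
    return w

rng = np.random.default_rng(0)
x = rng.standard_normal(5000)                # white-noise probe signal
unknown_taps = np.array([0.5, -0.3, 0.2])    # assumed system to identify
d = np.convolve(x, unknown_taps)[: len(x)]   # noise-free output of that system

estimated_taps = lms_identify(x, d, num_taps=3)
print(estimated_taps)  # should be close to unknown_taps
```

The same loop structure underlies other adaptive filtering tasks; what changes is where the input and the desired signal come from. With noisy measurements the estimate would fluctuate around the true taps rather than settle exactly, and a smaller learning rate would trade convergence speed for lower fluctuation.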

Referred: https://www.geeksforgeeks.org
