
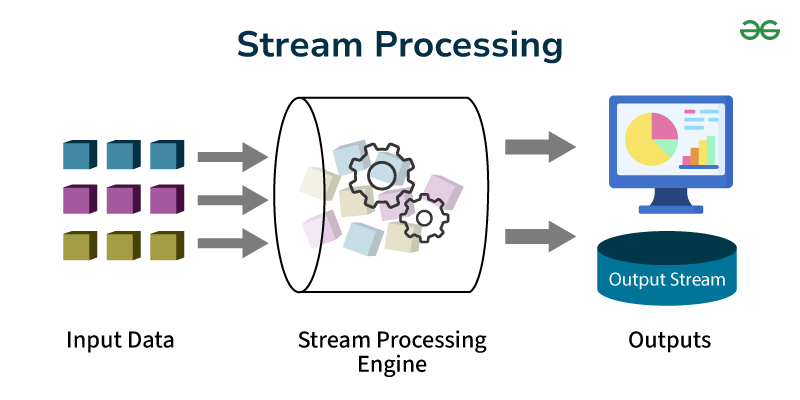

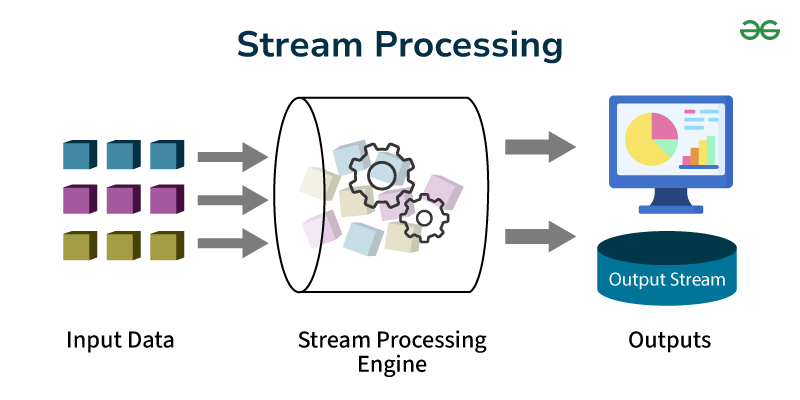

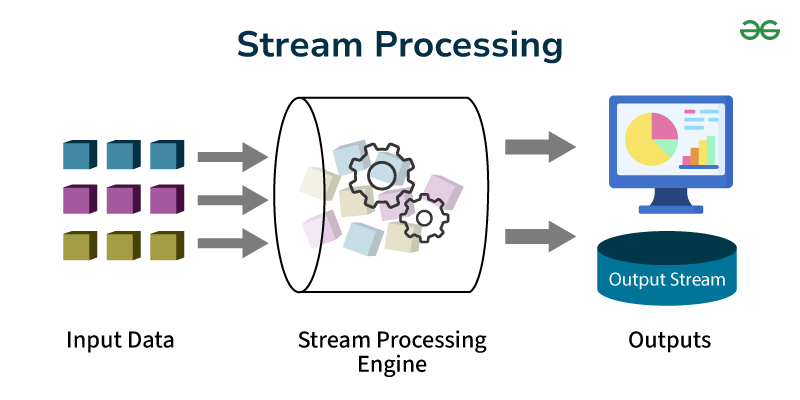

Stream processing is a technique for analyzing and processing large volumes of real-time data as it flows in from various sources. Unlike traditional methods that handle data in batches, stream processing works on data continuously as it is generated, making it possible to derive insights, trigger actions, and update systems almost instantly.

This technology is essential for applications that require real-time data analysis and immediate responses. In social media, real-time analysis of viewer behavior helps media companies personalize content recommendations. In financial trading, stream processing can analyze market data in real time to execute trades instantly. In fraud detection, it can spot suspicious activities as they happen, preventing fraud before it causes damage.

What is Stream Processing?

Stream processing is a data processing and management technique that takes a continuous data stream and analyzes, transforms, filters, or enriches it in real time. Once processed, the data is sent to an application, a data store, or another stream processing engine. Stream processing engines enable timely decision-making and provide insights as data flows in, which makes them crucial for modern, data-driven applications. The technique is also known by several other names, including real-time analytics, streaming analytics, complex event processing, real-time streaming analytics, and event processing. Although these terms once described somewhat different things, the tools (frameworks) have largely converged under the term stream processing.

Figure: Real-Time Stream Processing

How Does Stream Processing Work?

- Data Collection: Data is continuously collected from various sources like sensors, social media, or financial transactions.

- Data Ingestion: The incoming data is ingested into the stream processing engine without waiting to accumulate large batches.

- Real-Time Processing: Each piece of data is immediately analyzed and processed as it arrives.

- Data Transformation: The engine may transform the data, such as filtering, aggregating, or enriching it with additional information.

- Immediate Insights: The processed data provides real-time insights and results.

- Instant Actions: Based on the insights, the system can trigger instant actions or responses, ensuring timely decision-making.

- Output: The results are written to various destinations, such as dashboards, databases, or alerting systems, for further use or analysis.
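The steps above can be sketched as a chain of Python generators. This is a minimal illustration, not a production engine: the record shape `(sensor_id, celsius)`, the plausibility range, the 30 °C alert threshold, and the function names `ingest`, `transform`, `act`, and `run_pipeline` are all hypothetical choices made for this example, and a hard-coded list stands in for a live data source.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical record shape for this sketch: (sensor_id, temperature in °C).
Reading = tuple[str, float]


def ingest(source: Iterable[Reading]) -> Iterator[Reading]:
    """Ingestion: pass each record on as soon as it arrives (no batching)."""
    for record in source:
        yield record


def transform(records: Iterable[Reading]) -> Iterator[dict]:
    """Transformation: filter out implausible readings and enrich the rest."""
    for sensor_id, celsius in records:
        if not -50.0 <= celsius <= 150.0:  # filter
            continue
        yield {"sensor": sensor_id, "celsius": celsius,
               "fahrenheit": celsius * 9 / 5 + 32}  # enrich with a derived field


def act(records: Iterable[dict], threshold: float,
        alert: Callable[[dict], None]) -> Iterator[dict]:
    """Insights and actions: trigger an alert the moment a reading crosses the threshold."""
    for record in records:
        if record["celsius"] > threshold:
            alert(record)
        yield record


def run_pipeline(source: Iterable[Reading], threshold: float = 30.0):
    alerts: list[dict] = []
    sink: list[dict] = []  # output: stand-in for a dashboard, database, or topic
    # Generators are lazy, so each record flows through every stage
    # before the next record is read from the source.
    for record in act(transform(ingest(source)), threshold, alerts.append):
        sink.append(record)
    return sink, alerts


# Data collection: a hard-coded list standing in for a live sensor feed.
readings = [("s1", 21.5), ("s2", 999.0), ("s1", 35.0)]
sink, alerts = run_pipeline(readings)
```

Because each stage pulls one record at a time, the alert for the 35 °C reading fires as soon as that record is read, without waiting for the rest of the input, which is the key difference from collecting a batch first.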

Stream Processing Architecture

There are several types of stream processing architectures, each designed to handle real-time data in different ways. Here are the main types:

Event Stream Processing (ESP):

- Definition: Event Stream Processing focuses on real-time processing and analysis of continuous streams of events or data records. It involves capturing, processing, and reacting to events as they occur, typically in milliseconds or seconds.

- Use Case: ESP is used for applications requiring immediate responses to events, such as real-time monitoring, fraud detection, and IoT data processing.
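A minimal sketch of the event-at-a-time idea behind ESP, assuming a single process: handlers are registered per event type and run the moment an event is processed, with no buffering window. The `Event` fields, the event types, the `EventProcessor` class, and the 80-degree overheat rule are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class Event:
    kind: str          # hypothetical event types, e.g. "temperature", "door_open"
    source: str
    value: float = 0.0


class EventProcessor:
    """Dispatches each incoming event to the handlers registered for its type."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[Event], None]]] = defaultdict(list)

    def on(self, kind: str, handler: Callable[[Event], None]) -> None:
        self._handlers[kind].append(handler)

    def process(self, event: Event) -> None:
        # React immediately: there is no buffering or batch window.
        for handler in self._handlers.get(event.kind, []):
            handler(event)


alerts: list[str] = []


def overheat_alert(event: Event) -> None:
    if event.value > 80.0:
        alerts.append(f"overheat at {event.source}")


esp = EventProcessor()
esp.on("temperature", overheat_alert)
for ev in [Event("temperature", "pump-1", 65.0),
           Event("temperature", "pump-2", 91.0),
           Event("door_open", "gate-3")]:
    esp.process(ev)
```

Events with no registered handler (here `door_open`) are simply ignored; a real ESP engine would also handle concurrency, ordering, and fault tolerance, which this sketch leaves out.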

Message-Oriented Middleware (MOM):

- Definition: Message-Oriented Middleware facilitates communication between distributed systems by managing the exchange of messages. It ensures reliable delivery, supports messaging patterns (like publish-subscribe), and enables integration across heterogeneous systems.

- Use Case: MOM is essential for asynchronous communication and integration in applications like enterprise messaging, microservices architectures, and systems requiring decoupling of components.
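The publish-subscribe pattern mentioned above can be illustrated with a toy, in-process broker. This is only a sketch under strong assumptions: the `Broker` class and topic names are hypothetical, and unlike real middleware it keeps messages in memory with no persistence, acknowledgments, or network transport, so it does not provide reliable delivery.

```python
import queue
from collections import defaultdict


class Broker:
    """Toy in-process publish-subscribe broker.

    Each subscriber gets its own queue, so publishers never call consumers
    directly, and putting into an unbounded queue never blocks the publisher.
    """

    def __init__(self) -> None:
        self._subscribers: dict[str, list[queue.Queue]] = defaultdict(list)

    def subscribe(self, topic: str) -> queue.Queue:
        q: queue.Queue = queue.Queue()
        self._subscribers[topic].append(q)
        return q

    def publish(self, topic: str, message: object) -> int:
        """Fan the message out to every subscriber of `topic`; return the fan-out count."""
        subs = self._subscribers.get(topic, [])
        for q in subs:
            q.put(message)
        return len(subs)


broker = Broker()
billing = broker.subscribe("orders")    # two independent consumers
shipping = broker.subscribe("orders")
delivered = broker.publish("orders", {"order_id": 42, "total": 19.99})
received = [billing.get_nowait(), shipping.get_nowait()]
```

The publisher only knows the topic name, not who consumes it, which is the decoupling that makes MOM useful in microservices architectures.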

Complex Event Processing (CEP)

- Definition: Complex Event Processing involves analyzing and correlating multiple streams of data to identify meaningful patterns or events. It focuses on detecting complex patterns within streams in real-time or near real-time.

- Use Case: CEP is used for applications requiring high-level event pattern detection, such as algorithmic trading, operational intelligence, and dynamic pricing in retail.
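To make "detecting complex patterns" concrete, here is a minimal sketch of one CEP-style rule: three or more failed logins followed by a successful login for the same user within 60 seconds. The rule, the thresholds, the `LoginEvent` type, and the `BruteForceDetector` class are hypothetical; CEP engines such as FlinkCEP or Esper let you declare patterns like this instead of hand-coding them.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    ts: float      # event time in seconds
    user: str
    success: bool


class BruteForceDetector:
    """Matches the sequence FAIL x `failures` -> SUCCESS for one user within `window` seconds."""

    def __init__(self, failures: int = 3, window: float = 60.0) -> None:
        self.failures = failures
        self.window = window
        self._recent_failures: dict[str, deque] = defaultdict(deque)

    def observe(self, ev: LoginEvent) -> bool:
        fails = self._recent_failures[ev.user]
        # Evict failures that have fallen outside the time window.
        while fails and ev.ts - fails[0] > self.window:
            fails.popleft()
        if not ev.success:
            fails.append(ev.ts)
            return False
        matched = len(fails) >= self.failures
        fails.clear()  # a success completes or resets the sequence
        return matched


events = [LoginEvent(0, "alice", False), LoginEvent(10, "alice", False),
          LoginEvent(20, "bob", True), LoginEvent(25, "alice", False),
          LoginEvent(30, "alice", True), LoginEvent(100, "carol", False),
          LoginEvent(200, "carol", False), LoginEvent(205, "carol", False),
          LoginEvent(210, "carol", True)]
detector = BruteForceDetector()
suspicious = [ev.user for ev in events if detector.observe(ev)]
```

Only `alice` matches: `carol` also fails three times, but her first failure falls outside the 60-second window before the success arrives. The sketch assumes events arrive in timestamp order.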

Data Stream Processing (DSP)

- Definition: Data Stream Processing involves processing and analyzing continuous data streams to derive insights and make decisions in real time. It includes operations like filtering, aggregation, transformation, and enrichment of streaming data.

- Use Case: DSP is used in various applications, including real-time analytics, sensor data processing, financial market analysis, and monitoring systems.
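The four DSP operations named above (filtering, aggregation, transformation, enrichment) can be combined in a small tumbling-window job. This is a sketch that assumes readings arrive in timestamp order with no late data; the `(timestamp, sensor, value)` tuple shape, the `SENSOR_LOCATION` lookup table, and the 10-second window are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical reference data used for enrichment.
SENSOR_LOCATION = {"s1": "boiler room", "s2": "warehouse"}


def _flush(window_start: float, totals: dict, counts: dict) -> Iterator[dict]:
    """Emit one aggregated, enriched result per sensor for a closed window."""
    for sensor in sorted(totals):
        yield {"window_start": window_start, "sensor": sensor,
               "avg": totals[sensor] / counts[sensor],
               "location": SENSOR_LOCATION.get(sensor, "unknown")}  # enrichment


def tumbling_window_avg(readings: Iterable[tuple[float, str, float]],
                        size: float) -> Iterator[dict]:
    """Average each sensor's values over fixed, non-overlapping windows of `size` seconds."""
    window_start = None
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for ts, sensor, value in readings:
        if value < 0:                    # filtering: drop invalid readings
            continue
        start = ts - (ts % size)         # transformation: map timestamp to its window
        if window_start is not None and start != window_start:
            yield from _flush(window_start, totals, counts)  # window closed
            totals.clear()
            counts.clear()
        window_start = start
        totals[sensor] += value          # aggregation: running sum and count
        counts[sensor] += 1
    if window_start is not None:
        yield from _flush(window_start, totals, counts)  # final window


readings = [(0, "s1", 10.0), (5, "s2", 4.0), (9, "s1", 20.0),
            (12, "s1", -1.0), (14, "s1", 30.0)]
results = list(tumbling_window_avg(readings, size=10))
```

Results for a window are emitted as soon as the first reading of the next window arrives, so memory stays proportional to the number of sensors rather than the length of the stream.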

Lambda Architecture for Stream Processing:

- Definition: Lambda Architecture is a hybrid approach combining batch and stream processing techniques to handle large-scale, fast-moving data. It uses both real-time stream processing and batch processing to provide accurate and timely insights.

- Use Case: Lambda Architecture is applied in systems requiring both real-time analytics and historical data analysis, such as social media analytics, recommendation engines, and IoT platforms.
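A toy page-view counter shows how the Lambda layers fit together. This is a single-process sketch under simplifying assumptions: an in-memory list stands in for the distributed master dataset, `run_batch` stands in for a periodic batch job, and the `LambdaPageViews` class and page names are hypothetical.

```python
from collections import Counter


class LambdaPageViews:
    """Sketch of the Lambda pattern for counting page views.

    - batch layer: periodically recomputes counts from the full event log
    - speed layer: incrementally counts events that arrived since the last batch run
    - serving layer: answers queries by merging both views
    """

    def __init__(self) -> None:
        self.master_log: list[str] = []   # append-only record of every event
        self.batch_view: Counter = Counter()
        self.realtime_view: Counter = Counter()

    def ingest(self, page: str) -> None:
        self.master_log.append(page)      # kept for future batch recomputation
        self.realtime_view[page] += 1     # speed layer: visible immediately

    def run_batch(self) -> None:
        # Recompute from scratch over all history: accurate, but slow at scale.
        self.batch_view = Counter(self.master_log)
        # Single-threaded here, so every logged event is now in the batch view
        # and the speed layer can be reset without double counting.
        self.realtime_view.clear()

    def query(self, page: str) -> int:
        return self.batch_view[page] + self.realtime_view[page]


views = LambdaPageViews()
for page in ["/home", "/cart", "/home"]:
    views.ingest(page)
views.run_batch()
views.ingest("/home")            # arrives after the batch run
home_count = views.query("/home")
```

The query merges 2 views from the batch layer with 1 from the speed layer. In a real deployment, resetting the speed layer must only discard events the batch job actually covered, which is one of the operational costs that motivated the Kappa Architecture.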

Figure: Lambda Architecture for Stream Processing

Kappa Architecture for Stream Processing:

- Definition: Kappa Architecture is an evolution of Lambda Architecture that simplifies the data pipeline by using only stream processing for both real-time and batch data processing. It emphasizes using stream processing platforms as the core for data processing.

- Use Case: Kappa Architecture is suitable for scenarios where simplicity, scalability, and unified processing of real-time and historical data are critical, such as real-time analytics, IoT data processing, and log analysis.
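In Kappa, historical reprocessing is just the stream job re-run from the start of a replayable log. The sketch below assumes a hypothetical in-memory `EventLog` in place of a durable log such as a Kafka topic; the revenue handlers and event fields are also hypothetical. The same `process_new` function serves both the replay path and the live path.

```python
from typing import Callable, Iterator

Handler = Callable[[dict, dict], None]


class EventLog:
    """Append-only, replayable log (in-memory stand-in for a durable log)."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> int:
        self._events.append(event)
        return len(self._events) - 1          # offset of the new event

    def read_from(self, offset: int) -> Iterator[tuple[int, dict]]:
        for i in range(offset, len(self._events)):
            yield i, self._events[i]


def process_new(log: EventLog, state: dict, handler: Handler, offset: int) -> int:
    """Apply events from `offset` onward; return the next offset to read."""
    for i, event in log.read_from(offset):
        handler(state, event)
        offset = i + 1
    return offset


def build_view(log: EventLog, handler: Handler) -> tuple[dict, int]:
    """Reprocessing = replaying the whole log through the same stream code."""
    state: dict = {}
    return state, process_new(log, state, handler, 0)


def revenue_v1(state: dict, event: dict) -> None:
    state[event["user"]] = state.get(event["user"], 0) + event["amount"]


def revenue_v2(state: dict, event: dict) -> None:
    if event["amount"] > 0:                   # new logic: ignore refunds
        state[event["user"]] = state.get(event["user"], 0) + event["amount"]


log = EventLog()
for e in [{"user": "a", "amount": 10}, {"user": "b", "amount": 5},
          {"user": "a", "amount": -3}]:
    log.append(e)
v1, _ = build_view(log, revenue_v1)
v2, pos = build_view(log, revenue_v2)        # rebuild with changed logic
log.append({"user": "b", "amount": 2})       # a new live event
pos = process_new(log, v2, revenue_v2, pos)  # keep v2 up to date
```

Changing the business logic does not require a separate batch pipeline: the new view is rebuilt by replay and then kept current by the same code, after which readers can switch from `v1` to `v2`.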

Figure: Kappa Architecture for Stream Processing

Each of these architectures is designed to address specific needs and challenges in stream processing, allowing organizations to choose the best approach for their particular use cases and requirements.

When to Use Stream Processing?

Use stream processing when you need to analyze and respond to data in real time. It is well suited to producing real-time analytics results, and streaming data processing systems are effective solutions for big data scenarios that require minimal latency. Here are some specific scenarios:

- Real-Time Analytics: When you need immediate insights, such as monitoring live social media feeds or website user activity.

- Fraud Detection: To instantly detect and respond to suspicious activities in financial transactions or online services.

- IoT Data Management: For processing continuous data from sensors and devices in real-time, such as in smart homes or industrial automation.

- Live Monitoring: When tracking and reacting to events as they happen, like network security threats or system performance issues.

- Dynamic Pricing: In scenarios like e-commerce or ride-sharing, where prices need to adjust based on real-time demand and supply.

- Real-Time Recommendations: To provide users with immediate suggestions, such as in online shopping or streaming services.

Stream processing is also particularly effective for algorithmic trading and stock market surveillance, computer system and network monitoring, wildlife tracking, geographic data processing, predictive maintenance, manufacturing line monitoring, and smart device applications.
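As an example of the fraud-detection scenario listed above, a common streaming signal is transaction velocity: how many transactions one card makes within a sliding time window. This is a minimal sketch; the `VelocityCheck` class, the limit of 3 transactions per 60 seconds, and the card IDs are hypothetical, and real fraud systems combine many such signals.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flags a card that makes more than `max_txns` transactions within `window` seconds."""

    def __init__(self, max_txns: int = 3, window: float = 60.0) -> None:
        self.max_txns = max_txns
        self.window = window
        self._seen: dict[str, deque] = defaultdict(deque)

    def check(self, card: str, ts: float) -> bool:
        times = self._seen[card]
        times.append(ts)
        # Sliding window: forget transactions older than `window` seconds.
        while ts - times[0] > self.window:
            times.popleft()
        return len(times) > self.max_txns


transactions = [("c1", 0), ("c1", 10), ("c2", 15), ("c1", 20), ("c1", 30), ("c1", 200)]
checker = VelocityCheck()
flagged = [(card, ts) for card, ts in transactions if checker.check(card, ts)]
```

The fourth `c1` transaction within a minute is flagged at the moment it arrives, so it could be held for review before it completes; the later transaction at 200 seconds is not flagged because the earlier ones have left the window.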

What are Stream Processing Frameworks?

Stream processing frameworks are tools and libraries that help developers build applications that process data in real-time. Some popular stream processing frameworks include:

- Apache Kafka: A distributed event streaming platform used for building real-time data pipelines and streaming applications. It can handle large volumes of data with high throughput, and its Kafka Streams library adds stream processing on top of Kafka topics.

- Apache Flink: A powerful stream processing framework that provides low-latency, high-throughput data processing. It supports both batch and stream processing.

- Apache Storm: A real-time computation system designed for processing unbounded streams of data. It is known for its scalability and fault-tolerance.

- Apache Samza: Developed by LinkedIn, Samza is a stream processing framework that works with Apache Kafka to provide distributed stream processing.

- Google Cloud Dataflow: A fully managed service for stream and batch processing, based on Apache Beam. It allows developers to write processing pipelines and execute them on Google Cloud.

- Amazon Kinesis: A cloud-based service for real-time processing of streaming data. It enables the development of applications that process and analyze streaming data.

- Microsoft Azure Stream Analytics: A real-time analytics service that can process millions of events per second, providing insights from data streams in real time.

- IBM Streams: A platform for processing large volumes of streaming data with low latency. It supports complex analytics and real-time processing.

Apache Storm supports real-time computation use cases such as online machine learning and continuous computation. Delta Lake, a storage layer rather than a processing engine, lets the same tables serve both streaming and batch workloads through a single architecture.

History of Stream Processing

Computer scientists have explored many frameworks for analyzing and processing data since the early days of computing. Early work in this area was referred to as sensor fusion. In the early 1990s, Stanford University professor David Luckham coined the term “complex event processing” (CEP). This work contributed to the development of service-oriented architectures (SOAs) and enterprise service buses (ESBs). The rise of cloud services and open-source software later made managing event data streams more cost-effective, for example through publish-subscribe services based on Kafka.

Stream vs Batch Processing

| Stream processing | Batch processing |
|---|---|
| Processes a continuous stream of data. | Processes large amounts of data in a single batch collected over a set period. |
| The data size is unknown in advance and potentially unlimited. | The data size is predictable and finite. |
| Analyzes data in real time, as it arrives. | Processes a large amount of data all at once. |
| Typically produces results within seconds or less. | Takes longer to process data. |
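The second and fourth rows of the table come down to how state is kept. A batch job can compute an average only once the complete, finite dataset is available, while a streaming job can maintain the average incrementally in constant memory and report an up-to-date value after every element. The function and class names below are hypothetical; the incremental update is the standard running-mean formula.

```python
def batch_mean(values: list[float]) -> float:
    """Batch style: needs the complete, finite dataset up front."""
    return sum(values) / len(values)


class RunningMean:
    """Stream style: O(1) time and memory per element, so the input may be unbounded."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, x: float) -> float:
        self.count += 1
        self.mean += (x - self.mean) / self.count   # incremental mean update
        return self.mean


data = [4.0, 8.0, 6.0, 2.0]
stream = RunningMean()
partial_results = [stream.update(x) for x in data]  # an answer after every element
```

Both approaches agree once all the data has been seen, but only the streaming version had a usable answer after the first element.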

Stream Processing in Action

- Internet of Things (IoT) edge analytics: Companies in manufacturing, oil and gas, transportation, and architecture use stream processing to manage data from billions of connected devices.

- Real-time personalization, marketing, and advertising: Companies can deliver personalized, contextual consumer experiences through real-time processing streams.

Conclusion

Stream processing has become an essential technology for handling real-time data efficiently and effectively. By processing data as it streams in, businesses across various industries can analyze and respond to incoming data immediately. This helps organizations gain timely insights, improve decision-making, enhance operational efficiency, and gain a competitive advantage. With various architectures available, from micro-batch to continuous processing, businesses can choose the approach that best meets their specific needs.

Stream Processing – FAQs

What is the purpose of stream processing?

Stream processing is a data management technique that includes ingesting a continuous data stream to rapidly analyze, filter, transform, or enhance the data in real time.

What is the characteristic of stream processing?

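Stream processing is most suitable for applications with three characteristics: compute intensity (a high number of arithmetic operations per I/O or global memory reference), data parallelism (the same operation applied independently to many records), and data locality (data that is produced once, read shortly afterward, and then not read again).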

Does stream processing reduce latency?

Yes. Stream processing has lower latency than batch processing because data is processed as it enters the system, which makes it ideal for real-time analytics and jobs that require instantaneous insights.

What is event stream processing?

Event Stream Processing (ESP) processes each event in a continuous stream as soon as it occurs, for example as soon as a change is detected.
