Reinforcement learning with PyTorch lets an agent adjust its strategy dynamically, which is crucial for navigating complex environments and maximizing rewards. This article demonstrates how PyTorch supports the iterative improvement of RL agents by balancing exploration and exploitation, and it introduces PyTorch’s suitability for Reinforcement Learning (RL), emphasizing its dynamic computation graph and ease of implementation for training agents in environments like CartPole.

Reinforcement Learning with PyTorch

Reinforcement Learning (RL) is like teaching a child through rewards and punishments. In RL, an agent (like a robot or software) learns to perform tasks by trying to maximize the rewards it receives for its actions. PyTorch, a popular deep learning library, is a powerful tool for RL because of its flexibility, ease of use, and ability to perform tensor computations efficiently, which are essential in RL algorithms.

The magic of RL in PyTorch begins with its dynamic computation graph. Unlike frameworks that build a static graph before execution, PyTorch builds the graph as the code runs, so models can be adjusted on the fly. This matters for RL, where we often experiment with different strategies and tweak our models based on the agent’s performance in a simulated environment. PyTorch not only makes these experiments easier but also speeds up agent training through its optimized tensor operations and GPU acceleration.

Key Concepts of Reinforcement Learning
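As a rough illustration of the vocabulary used throughout this article (agent, environment, state, action, reward, policy), the sketch below runs one episode in a hypothetical toy environment. The `Corridor` class, the two policies, and `run_episode` are made-up names for illustration only; they are not part of PyTorch or Gym.

```python
import random


class Corridor:
    """Hypothetical toy environment: five cells in a row, goal at the right end."""

    def __init__(self):
        self.position = 2

    def reset(self):
        # The state is simply the agent's current cell (it starts in the middle).
        self.position = 2
        return self.position

    def step(self, action):
        # Action 0 moves one cell left, action 1 moves one cell right.
        self.position += 1 if action == 1 else -1
        done = self.position in (0, 4)                 # either end finishes the episode
        reward = 1.0 if self.position == 4 else 0.0    # only the right end pays off
        return self.position, reward, done


def random_policy(state):
    """A policy maps a state to an action; this one ignores the state."""
    return random.choice([0, 1])


def always_right(state):
    """A fixed policy that always moves toward the goal."""
    return 1


def run_episode(env, policy, max_steps=100):
    """Let the agent act until the episode ends and return the total reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent chooses an action
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward
```

Calling `run_episode(Corridor(), always_right)` returns 1.0 because the agent walks straight to the goal. The job of a learning algorithm is to find such a policy from the rewards alone.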

PyTorch facilitates the implementation of these concepts through its intuitive syntax and extensive library of pre-built functions, making it an excellent choice for diving into the exciting world of reinforcement learning.

Reinforcement Learning Algorithm for CartPole Balancing

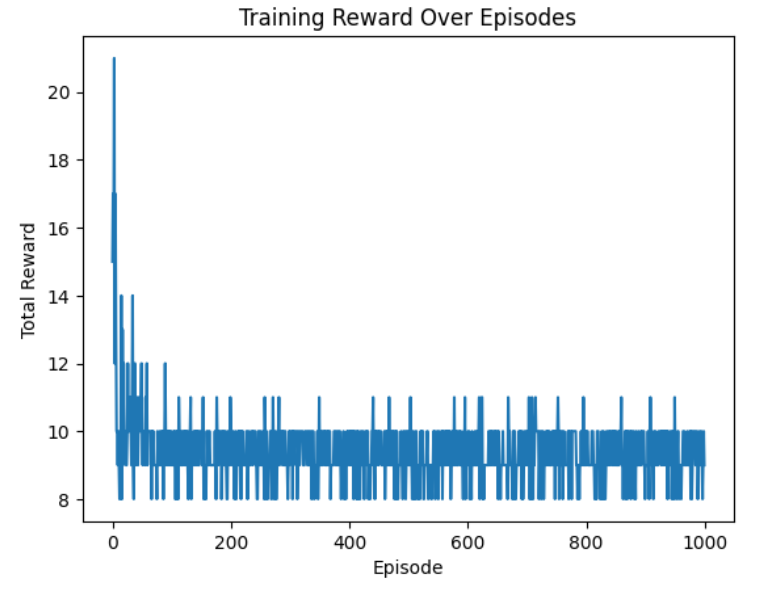

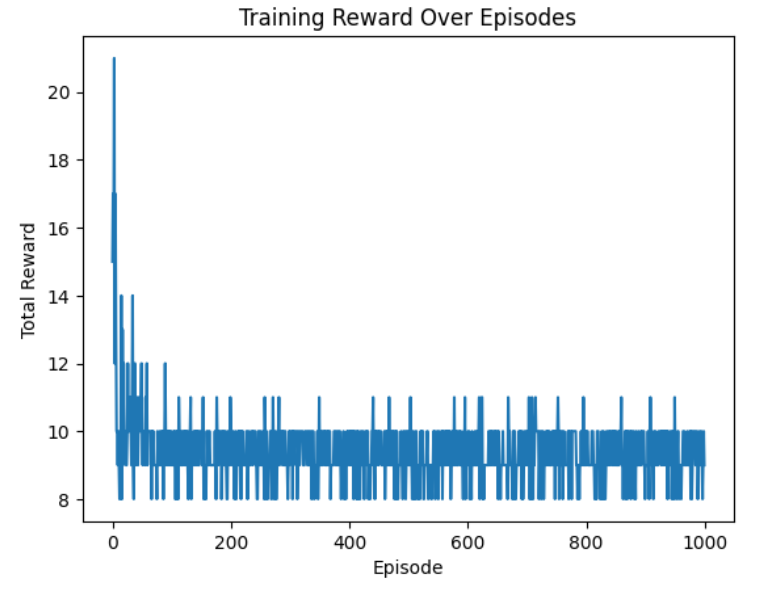

Implementing Reinforcement Learning using PyTorch

This section uses the CartPole environment from OpenAI’s Gym and demonstrates a basic policy gradient method to train an agent. Ensure you have PyTorch and Gym installed: pip install torch gym

Import Libraries

The code implements a simple policy gradient reinforcement learning algorithm using PyTorch, in which an agent learns to balance a pole on a cart in the CartPole environment provided by OpenAI Gym. It imports the necessary libraries: gym for the environment, torch for the neural network and optimization, numpy for numerical operations, and matplotlib for plotting.

Initialize Reward Storage

A list stores the total reward for each episode and is used later to visualize the learning curve.

Define Policy Network

The policy network is a neural network that maps states (observations from the environment) to actions. It consists of two linear layers with a ReLU activation in between and a final softmax layer that produces a probability distribution over the possible actions. Given a state as input, it outputs the probability of taking each action in that state. This probabilistic approach allows for exploration of the action space: actions are sampled according to their probabilities, which lets the agent learn which actions are most beneficial. The policy network is the agent’s “brain,” deciding how to act based on its current understanding of the environment, which it improves iteratively through training on the rewards received from the environment.

Calculate Discounted Rewards

This step calculates the discounted reward for each time step in an episode, weighting immediate rewards more heavily than future rewards.

Training Loop

The main function interacts with the environment, trains the policy network using the rewards collected, and has the optimizer update the network’s parameters based on the policy gradient.
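The steps described above might be sketched as follows. This is a minimal illustration, not the article's original listing: the hidden size of 128, the learning rate, the discount factor `gamma=0.99`, the return normalization, and the logging interval are all assumptions, as are the function names. The environment is expected to follow the Gym 0.26+ interface, where `reset()` returns `(observation, info)` and `step()` returns five values.

```python
import torch
import torch.nn as nn
import torch.optim as optim


class PolicyNetwork(nn.Module):
    """Two linear layers with ReLU in between and a softmax over actions."""

    def __init__(self, state_dim=4, hidden_dim=128, n_actions=2):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(state_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, n_actions),
            nn.Softmax(dim=-1),
        )

    def forward(self, state):
        return self.net(state)


def compute_discounted_rewards(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every time step of one episode."""
    returns, running = [], 0.0
    for r in reversed(rewards):
        running = r + gamma * running
        returns.insert(0, running)
    return returns


def run_episode(env, policy):
    """Play one episode, sampling each action from the policy's probabilities."""
    state, _ = env.reset()
    log_probs, rewards, done = [], [], False
    while not done:
        probs = policy(torch.as_tensor(state, dtype=torch.float32))
        dist = torch.distributions.Categorical(probs)
        action = dist.sample()
        log_probs.append(dist.log_prob(action))
        state, reward, terminated, truncated, _ = env.step(action.item())
        rewards.append(reward)
        done = terminated or truncated
    return log_probs, rewards


def update_policy(optimizer, log_probs, rewards, gamma=0.99):
    """One policy gradient step: raise the log-probability of well-rewarded actions."""
    returns = torch.tensor(compute_discounted_rewards(rewards, gamma), dtype=torch.float32)
    # Assumption: normalize returns to reduce variance (a common, optional trick).
    returns = (returns - returns.mean()) / (returns.std(unbiased=False) + 1e-8)
    loss = -(torch.stack(log_probs) * returns).sum()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()


def train(env, state_dim=4, n_actions=2, episodes=1000, lr=1e-2, gamma=0.99):
    """Train a policy and return it with the total reward of every episode."""
    policy = PolicyNetwork(state_dim, 128, n_actions)
    optimizer = optim.Adam(policy.parameters(), lr=lr)
    episode_rewards = []  # reward storage for the learning curve
    for episode in range(episodes):
        log_probs, rewards = run_episode(env, policy)
        update_policy(optimizer, log_probs, rewards, gamma)
        episode_rewards.append(sum(rewards))
        if episode % 50 == 0:  # assumed logging interval
            print(f"Episode {episode}, Total Reward: {episode_rewards[-1]}")
    return policy, episode_rewards
```

With Gym installed, `train(gym.make("CartPole-v1"))` would run this sketch on CartPole, whose observations have four components and which offers two actions. The returned `episode_rewards` list is what the plotting step consumes.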
Plotting the Learning Curve

Output: Episode 0, Total Reward: 15.0

After training, the total rewards per episode are plotted to visualize the learning progress.

Output graph

Output explanation: The graph shows the total reward per episode for a reinforcement learning agent across 1,000 episodes. The reward starts high but decreases and stabilizes, indicating the agent may not be improving over time.

Conclusion

This article explored using PyTorch for reinforcement learning, demonstrated through a practical example on the CartPole environment. Starting with simple interactions, the agent learned complex behaviors, such as balancing a pole, through trial and error, guided by rewards. The key takeaway is the power of reinforcement learning to solve problems by learning from actions’ outcomes rather than from direct instruction. The journey from initial failures to consistent success in achieving maximum rewards underscores the learning process’s dynamic and adaptive nature, highlighting reinforcement learning’s potential across various domains. Through this guide, we’ve seen how PyTorch facilitates building and training models for such tasks, offering an accessible pathway for exploring and applying reinforcement learning techniques.

Referred: https://www.geeksforgeeks.org
