|

|

Looking for the images can be quite a hassle don’t you think? Guess what? AI is here to make it much easier! Just imagine telling your computer what kind of picture you’re looking for and voila it generates it for you. That’s where Stable Diffusion, in Python, comes into play. It’s like magic – transforming words into visuals. In this article, we’ll explore how you can utilize Diffusion in Python to discover and craft stunning images. It’s, like having an artist right at your fingertips! What is Stable Diffusion?In 2022, the concept of Stable Diffusion, a model used for generating images from text, was introduced. This innovative approach utilizes diffusion techniques to create images based on textual descriptions. In addition to image generation, it can also be utilized for various tasks such as inpainting, outpainting, and generating image-to-image translations with the aid of a text prompt. How does it work?Diffusion models are a type of generative model that is trained to denoise an object, such as an image, to obtain a sample of interest. The model is trained to slightly denoise the image in each step until a sample is obtained. It first paints the image with random pixels and noise and tries to remove the noise by adjusting every step to give a final image that aligns with the prompt. Simplified Working

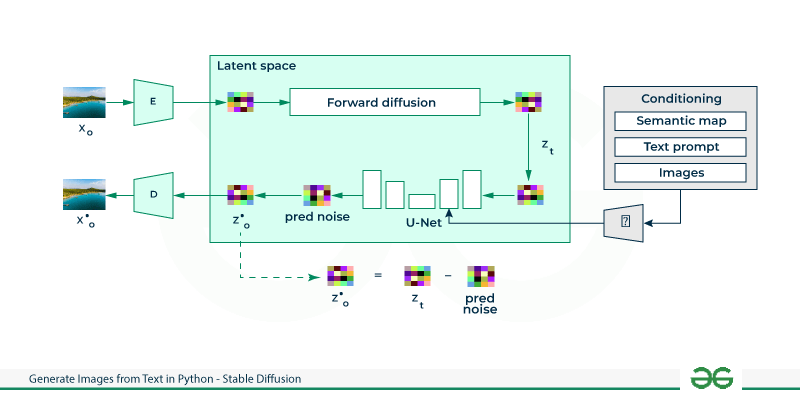

Architecture of Stable Diffusion

Stable Diffusion is founded on a diffusion model known as Latent Diffusion, which is recognized for its advanced abilities in image synthesis, specifically in tasks such as image painting, style transfer, and text-to-image generation. Unlike other diffusion models that focus solely on pixel manipulation, latent diffusion integrates cross-attention layers into its architecture. These layers enable the model to assimilate information from various sources, including text and other inputs. There are three main components in latent diffusion:

Autoencoder:An autoencoder is designed to learn a compressed version of the input image. A Variational Autoencoder consists of two main parts i.e. an encoder and a decoder. The encoder’s task is to compress the image into a latent form, and then the decoder uses this latent representation to recreate the original image. U-Net:U-Net is a kind of convolutional neural network (CNN) that’s used for cleaning up the latent representation of an image. It’s made up of a series of encoder-decoder blocks that step by step increase the image quality. The encoder part of the network reduces the image down to a lower resolution, and then the decoder works to bring this compressed image backup to its original, higher resolution and eliminate any noise in the process. Text EncoderThe job of the text encoder is to convert text prompts into a latent form. Typically, this is achieved using a transformer-based model, such as the Text Encoder from CLIP, which takes a series of input tokens and transforms them into a sequence of latent text embeddings. How to Generate Images from Text?The Stable Diffusion model is a huge framework that requires us to write very lengthy code to generate an image from a text prompt. However, HuggingFace has introduced Diffusers to overcome this challenge. With Diffusers, we can easily generate numerous images by simply writing a few lines of Python code, without the need to worry about the architecture behind it. In our case, we will be utilizing the cutting-edge StableDiffusionPipeline provided by the Diffusers library. This helps in generating an image from a text prompt with only a few lines of Python code. Requirements

pip install diffusers

pip install transformers

pip install Pillow

pip install accelerate scipy safetensors

Versions of DiffusionSome of the popular Stable Diffusion Text-to-Image model versions are:

The better version the slower inference time and great image quality and results to the given prompt. In this article, we will be using the stabilityai/stable-diffusion-2-1 model for generating images. stable-diffusion-2-1 model is fine-tuned from stable-diffusion-2. Stable Diffusion 2 is way better than Stable Diffusion 1 with improved image quality and is more realistic. Generating ImageHere is the Python code to run the model which generates the image as output. If you are using Google Colab, change the runtime to T4 which is GPU with a high amount of RAM. This code will generate a Pillow Image as output and is stored in the “image” variable that is accessed later.

Displaying the ImageIf you are running locally, use the following code to display the image.

Prompt: Photograph of a horse on a highway road at sunset. Output:

|

Reffered: https://www.geeksforgeeks.org

| AI ML DS |

Type: | Geek |

Category: | Coding |

Sub Category: | Tutorial |

Uploaded by: | Admin |

Views: | 13 |

-compressed-300.jpg)