Spring Cloud Data Flow is an open-source architectural component that uses other well-known Java-based technologies to create streaming and batch data processing pipelines. Batch processing is the processing of a finite amount of data without interruption or user interaction.

Components of Spring Cloud Data Flow

Step-by-Step Implementation of Batch Processing with Spring Cloud Data Flow

Below are the steps to implement batch processing with Spring Cloud Data Flow.

Step 1: Maven Dependencies

Let’s add the necessary Maven dependencies first. Since this is a batch application, we must import the Spring Batch project’s libraries:

<dependency>

Step 2: Add Main Class

The @EnableTask and @EnableBatchProcessing annotations must be added to the main Spring Boot class to enable the necessary functionality. The class-level @EnableTask annotation instructs Spring Cloud Task to perform a complete bootstrap.

Step 3: Configure a Job

Let’s configure a job now. It does just one thing: it writes a String to a log file.
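The shape of such a job can be sketched without any framework. In Spring Batch the same idea would be expressed as a Job with a single Step that runs a Tasklet, usually declared in a configuration class. The sketch below is only a stand-in for that wiring: `LogOnlyJob`, `Work`, `Status`, the logger name, and the message "Job was run" are all hypothetical names chosen for illustration, and it uses `java.util.logging` from the JDK, not Spring Batch classes.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.logging.FileHandler;
import java.util.logging.Logger;
import java.util.logging.SimpleFormatter;

// Framework-free stand-in for the job described in Step 3.
// The types below are hypothetical local types, NOT Spring Batch classes.
class LogOnlyJob {

    // Mirrors the idea of a step reporting that it is done.
    enum Status { FINISHED }

    // One unit of work; a hypothetical counterpart of a tasklet-style step.
    interface Work {
        Status execute(Logger logger);
    }

    // Runs the single step and sends its log output to `logFile`.
    static Status run(Path logFile, Work work) throws IOException {
        Logger logger = Logger.getLogger("batch-job-sketch");
        logger.setUseParentHandlers(false); // keep output out of the console
        FileHandler handler = new FileHandler(logFile.toString());
        handler.setFormatter(new SimpleFormatter());
        logger.addHandler(handler);
        try {
            return work.execute(logger);
        } finally {
            logger.removeHandler(handler);
            handler.close(); // flush and release the log file
        }
    }

    public static void main(String[] args) throws IOException {
        Path logFile = Files.createTempFile("batch-job", ".log");
        Status status = run(logFile, logger -> {
            logger.info("Job was run"); // the only thing this job does
            return Status.FINISHED;
        });
        System.out.println(status + ", log written to " + logFile);
    }
}
```

The step returns a status, so the caller does not have to infer from the log whether the run finished. A real Spring Batch job would leave the logging setup to Spring Boot.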

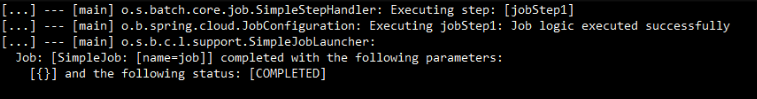

Step 4: Register the Application

To register the application with the App Registry, we need a unique name, an application type, and a URI that points to the app artefact. Enter the following command at the Spring Cloud Data Flow Shell prompt:

app register --name batch-job --type task

Output:

All the job does is print a string to a log file. The log files can be found in the directory that appears in the log output of the Data Flow Server.
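As a small illustration of the three pieces of information that Step 4 asks for (name, type, and URI), the following sketch assembles them into the text of a shell command. The `AppRegistration` record and the example Maven URI are hypothetical. The coordinates `com.example:batch-job:jar:0.0.1-SNAPSHOT` are placeholders and should be replaced with those of the actual artefact.

```java
// Sketch of the three values Step 4 requires, rendered as a
// Data Flow Shell command. The record and the example URI are hypothetical.
record AppRegistration(String name, String type, String uri) {

    // Reject registrations that are missing any of the three values.
    AppRegistration {
        if (name.isBlank() || type.isBlank() || uri.isBlank()) {
            throw new IllegalArgumentException("name, type and uri are all required");
        }
    }

    // Builds the text to type at the shell prompt.
    public String toShellCommand() {
        return "app register --name " + name + " --type " + type + " --uri " + uri;
    }

    public static void main(String[] args) {
        AppRegistration registration = new AppRegistration(
                "batch-job", "task", "maven://com.example:batch-job:jar:0.0.1-SNAPSHOT");
        System.out.println(registration.toShellCommand());
    }
}
```

Keeping the three values in one record means a missing URI is caught before the command is built.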

This completes batch processing with Spring Cloud Data Flow.

Referred: https://www.geeksforgeeks.org