Scaling a Kubernetes cluster is crucial to meet the evolving needs of applications and ensure optimal performance. As your workload grows, scaling becomes vital to distribute the load, improve resource utilization, and maintain high availability. This article walks through best practices and techniques for scaling a Kubernetes cluster efficiently. We will explore horizontal and vertical scaling strategies, load balancing, pod scaling, monitoring, and other important considerations.

Understanding Kubernetes Cluster Scaling

Scaling automatically adjusts pod resources based on utilization over time, minimizing resource waste and making the most efficient use of cluster resources. The following sections explain how to scale a cluster step by step.

Planning for Cluster Scaling

Planning for cluster scaling in DevOps involves designing and implementing strategies to handle the growth of your application or infrastructure. Scaling is critical to ensure that your system can handle increased load, deliver good overall performance, and stay reliable. Plan cluster scaling according to your requirements, such as traffic patterns and resource consumption.
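As a rough illustration of planning from traffic and resource consumption, the sketch below turns a peak request rate into a replica count and then into a node count. The per-pod throughput, the pod CPU request, the node's allocatable CPU, and the 20% headroom are hypothetical inputs you would measure for your own workload; memory and every other scheduling constraint are ignored.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def plan_capacity(peak_rps: int, rps_per_pod: int, pod_cpu_millicores: int,
                  node_cpu_millicores: int, headroom_pct: int = 20) -> tuple[int, int]:
    """Return (replicas, nodes) needed for a peak load.

    Hypothetical assumptions: one pod serves `rps_per_pod` requests per second,
    CPU is the only constraint, and `node_cpu_millicores` is the node's
    allocatable CPU (capacity minus system reservations).
    """
    # Replicas needed to serve the peak plus the headroom percentage.
    replicas = ceil_div(peak_rps * (100 + headroom_pct), 100 * rps_per_pod)
    # How many pods fit on one node, judged by CPU request alone.
    pods_per_node = node_cpu_millicores // pod_cpu_millicores
    if pods_per_node == 0:
        raise ValueError("a single pod does not fit on one node")
    nodes = ceil_div(replicas, pods_per_node)
    return replicas, nodes


replicas, nodes = plan_capacity(peak_rps=1200, rps_per_pod=100,
                                pod_cpu_millicores=500,
                                node_cpu_millicores=3500)
print(replicas, nodes)  # 15 3
```

Integer arithmetic is used on purpose so that a result like 14.4 replicas rounds up to 15 without floating-point surprises.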

Scaling Up a Kubernetes Cluster

Scaling up a Kubernetes cluster in a DevOps environment involves adding extra nodes to your cluster to handle increased workloads or to improve overall performance and availability. You can scale up the cluster by following the steps below.

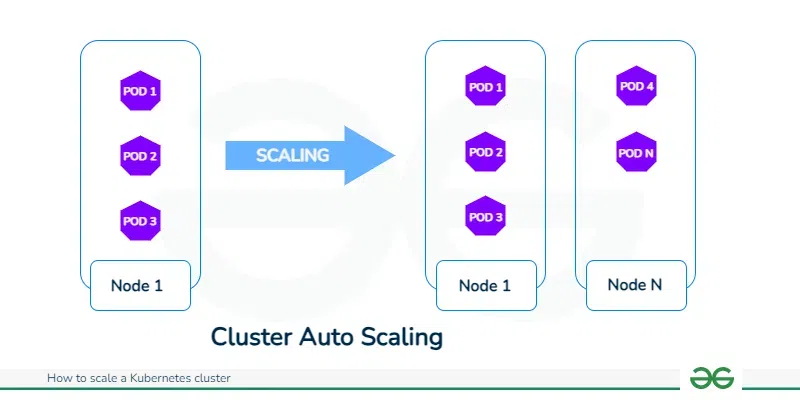

Scaling Out a Kubernetes Cluster

Scaling out a Kubernetes cluster involves adding more worker nodes to the existing cluster to handle increased workloads and improve overall performance.

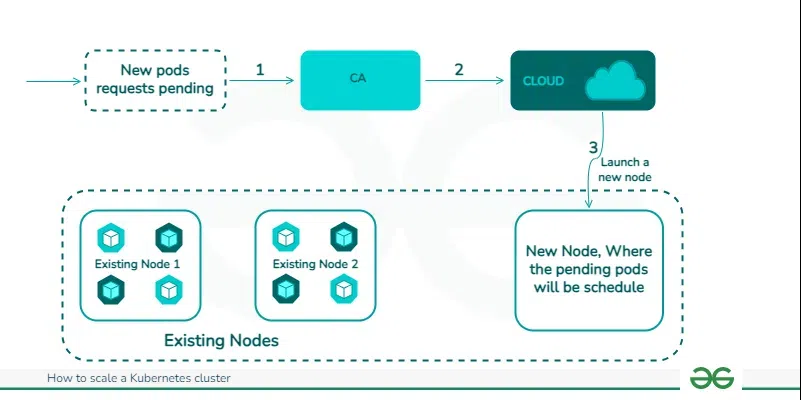

Automating Cluster Scaling

Automating cluster scaling in a DevOps environment is vital for managing infrastructure efficiently and responding to changing workloads. Automation reduces manual intervention, improves consistency, and increases the overall agility of your deployment.
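At the workload level, automation usually means declaring an autoscaler instead of running scaling commands by hand. The sketch below builds a HorizontalPodAutoscaler manifest for the stable autoscaling/v2 API as a Python dictionary and prints it as JSON, which kubectl apply -f accepts just as it accepts YAML. The names web-hpa and web, the replica bounds, and the 70% CPU target are hypothetical; CPU-based scaling also assumes a resource metrics source such as metrics-server is installed and that the target pods declare CPU requests.

```python
import json


def hpa_manifest(name: str, target_deployment: str, min_replicas: int,
                 max_replicas: int, cpu_utilization: int) -> dict:
    """Build an autoscaling/v2 HorizontalPodAutoscaler as a plain dict."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            # The workload whose replica count the HPA controls.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": target_deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # Keep average CPU usage at this percentage of the pods' CPU requests.
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization",
                               "averageUtilization": cpu_utilization},
                },
            }],
        },
    }


manifest = hpa_manifest("web-hpa", "web", min_replicas=2, max_replicas=10,
                        cpu_utilization=70)
# Save this output as web-hpa.json and apply it with: kubectl apply -f web-hpa.json
print(json.dumps(manifest, indent=2))
```

Generating the manifest from code is only one option; the same object is more commonly kept as a YAML file in version control.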

Monitoring and Optimization

Monitoring and optimization play indispensable roles in a DevOps environment, ensuring the reliability, performance, and efficiency of systems.
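Much of this monitoring comes down to comparing observed usage with what pods requested. The helper below parses a subset of Kubernetes resource quantity notation (m for millicores, the decimal suffixes k, M, G and the binary suffixes Ki, Mi, Gi) and expresses usage as a percentage of the request, which is how the HPA defines resource utilization. It is a simplified sketch: exponent forms such as 1e3 and larger suffixes such as T or Ti are not handled.

```python
from decimal import Decimal

# Multipliers for a subset of Kubernetes quantity suffixes.
_SUFFIXES = {
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
}


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity such as '250m', '2' or '512Mi' to base units."""
    # Check two-character suffixes first so 'Mi' is not mistaken for 'M'.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(q)


def utilization_percent(usage: str, request: str) -> float:
    """Usage as a percentage of the request, as HPA measures utilization."""
    return float(parse_quantity(usage) / parse_quantity(request) * 100)


print(utilization_percent("350m", "500m"))    # CPU: 70.0
print(utilization_percent("384Mi", "512Mi"))  # memory: 75.0
```

Decimal is used instead of float so that values such as 0.35 cores are represented exactly.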

How Kubernetes Enables Scaling

Kubernetes makes scaling possible by dynamically allocating and managing resources, scaling up or down in response to workload demand. Its container orchestration distributes workloads effectively across nodes, optimizing resource utilization. Through features such as autoscaling, Kubernetes also supports horizontal scaling, helping applications stay responsive and resilient as traffic loads change.

Types of Auto Scaling in Kubernetes

Horizontal Pod Autoscaler (HPA)

In Kubernetes, the Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pod replicas based on CPU utilization or custom metrics. By scaling pods dynamically, it keeps applications responsive and optimizes resource use. Workload fluctuations are handled effectively: pods are scaled up or down depending on demand, which avoids wasted resources. For more detailed information regarding horizontal pod autoscaling, refer to this link.

Vertical Pod Autoscaler (VPA)

In Kubernetes, the Vertical Pod Autoscaler (VPA) matches resource allocation to each pod's needs by continually adjusting pod resource requests and limits based on observed resource utilization. Vertical scaling with VPA improves application scalability and ensures effective resource use, allowing applications to adjust to fluctuating workload needs and promoting overall performance and stability.

Cluster/Multidimensional Scaling

Cluster or multidimensional scaling in Kubernetes is the process of dynamically modifying the cluster's size and configuration according to workload demands and resource availability. Automating cluster infrastructure management in this way optimizes performance and resource usage.
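To make cluster-level scaling more concrete: the Cluster Autoscaler adds nodes when pods stay Pending because no existing node has room for them. The function below is a simplified, hypothetical estimate of how many identical new nodes such pods would need, using first-fit-decreasing bin packing on CPU requests only. It is not the Cluster Autoscaler's implementation, which simulates the scheduler against real node templates and considers many more constraints.

```python
def nodes_to_add(pending_cpu_m: list[int], node_allocatable_m: int) -> int:
    """Estimate extra nodes for pending pods with first-fit-decreasing packing.

    Simplifications: only CPU requests (in millicores) are considered, every
    new node has the same allocatable CPU, and pending pods are assumed not to
    fit on any existing node.
    """
    free: list[int] = []  # remaining CPU on each hypothetical new node
    for request in sorted(pending_cpu_m, reverse=True):
        if request > node_allocatable_m:
            raise ValueError(f"pod requesting {request}m can never fit on one node")
        for i, capacity in enumerate(free):
            if capacity >= request:
                free[i] -= request  # place the pod on the first node with room
                break
        else:
            # No new node has room yet: open another one.
            free.append(node_allocatable_m - request)
    return len(free)


print(nodes_to_add([1500, 1000, 1000, 700, 500], node_allocatable_m=2000))  # 3
```

Sorting the largest requests first is a common bin-packing heuristic; it tends to waste less capacity than placing pods in arrival order.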
Options for Manual Kubernetes Scaling

Horizontal Scaling: Use the kubectl scale command to manually change the number of pod replicas in response to changing workload needs.
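For the manual horizontal option, a small wrapper can assemble the kubectl scale invocation so it can be logged or run from a script. The Deployment name web and the namespace are hypothetical placeholders; the command itself uses standard kubectl scale syntax.

```python
import shlex


def scale_command(deployment: str, replicas: int,
                  namespace: str = "default") -> list[str]:
    """Build a `kubectl scale` invocation for a Deployment."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return ["kubectl", "scale", f"deployment/{deployment}",
            f"--replicas={replicas}", "--namespace", namespace]


cmd = scale_command("web", 5)
print(shlex.join(cmd))
# kubectl scale deployment/web --replicas=5 --namespace default

# To execute it against a real cluster (needs kubectl and a kubeconfig):
#   import subprocess; subprocess.run(cmd, check=True)
```

Keeping the command as a list rather than a single string avoids shell quoting problems when it is passed to subprocess.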

Vertical Scaling: To optimize resource allocation based on performance requirements, you can manually modify the resource requests and limits in a workload's pod template (for example, a Deployment) using the kubectl edit command; the workload then rolls out new pods with the updated values.
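kubectl edit opens the object in an interactive editor. For scripted changes, the same edit to requests and limits can be expressed as a strategic merge patch, which is the default patch type of kubectl patch. The sketch below builds such a patch for a hypothetical Deployment and container both named web, with illustrative resource values; because the patch changes the pod template, the Deployment rolls out new pods carrying the new resources.

```python
import json
import shlex


def resources_patch(container: str, cpu_request: str, memory_request: str,
                    cpu_limit: str, memory_limit: str) -> dict:
    """Strategic merge patch that sets one container's requests and limits.

    Containers in a pod template are merged by name, so only the named
    container is changed.
    """
    return {"spec": {"template": {"spec": {"containers": [{
        "name": container,
        "resources": {
            "requests": {"cpu": cpu_request, "memory": memory_request},
            "limits": {"cpu": cpu_limit, "memory": memory_limit},
        },
    }]}}}}


patch = resources_patch("web", "250m", "256Mi", "500m", "512Mi")
cmd = ["kubectl", "patch", "deployment", "web", "--patch", json.dumps(patch)]
print(shlex.join(cmd))
```

kubectl set resources offers a similar shortcut, for example kubectl set resources deployment web --requests=cpu=250m,memory=256Mi --limits=cpu=500m,memory=512Mi.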

Kubernetes Automatic Scaling with the Horizontal Pod Autoscaler (HPA) Object

For a running application, you can scale a workload manually by changing the replicas field in its manifest file. Manual scaling works well when load spikes can be anticipated in advance or when load changes gradually over a long period, but it is not the best way to handle unexpected, unpredictable traffic spikes. Kubernetes' Horizontal Pod Autoscaler solves this problem: once you configure the metrics it should track, it watches the pods and automatically scales them when CPU or memory consumption moves away from an established target.

Horizontal pod autoscaling is the process of automatically adjusting the number of pod replicas managed by a controller in accordance with demand. It is driven by a configured metric and carried out by the HorizontalPodAutoscaler Kubernetes resource.

How Does HPA Work?

Kubernetes' Horizontal Pod Autoscaler (HPA) changes the number of pod replicas in line with resource metrics, such as CPU, or custom metrics, to ensure optimal performance and resource utilization. HPA objects are declared with apiVersion: autoscaling/v2 (the older autoscaling/v2beta2 version was removed in Kubernetes 1.26).

Best Practices for Scaling Kubernetes Workloads

Here are the top three techniques for scaling Kubernetes workloads.
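Returning to how HPA works: the Kubernetes documentation describes the core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance (0.1 by default) of 1.0. The sketch below reproduces only that formula plus the minReplicas/maxReplicas clamp; stabilization windows, scaling policies, and the controller's handling of unready pods are deliberately left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Replica count from the documented HPA scaling formula.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to
    [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: scale to 6.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))  # 6
```

Rounding up rather than to the nearest integer means the HPA errs on the side of slightly too much capacity rather than too little.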

Kubernetes Horizontal vs Vertical Scaling

With Kubernetes, horizontal scaling increases the number of pod replicas to divide the load, which makes it well suited to stateless applications with varying demand. Vertical scaling changes the resources allocated to individual pods, which makes it appropriate for stateful applications that need more resources per instance. For more detailed information, refer to this link.

Conclusion

Scaling a Kubernetes cluster involves a mix of automatic and manual techniques, supported by robust monitoring and optimization. By following these best practices, DevOps teams can ensure that their Kubernetes clusters stay adaptable, reliable, and well prepared to handle changing workloads.

Scale a Kubernetes Cluster – FAQs

How can I optimize costs while scaling a Kubernetes cluster?

What is Cluster Autoscaling, and when should it be used in Kubernetes?

How many types of Kubernetes clusters are there?

Can Kubernetes scale down to zero?

Why is scaling important for a Kubernetes cluster?


Referred: https://www.geeksforgeeks.org
