|

|

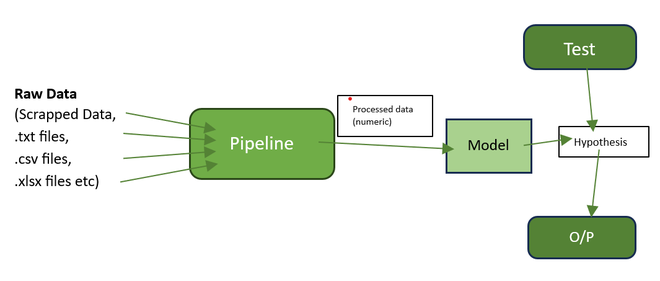

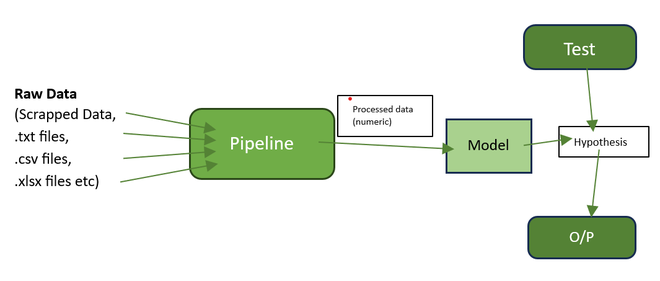

Data pipelining is essential for transforming raw text data into a numeric format suitable for analysis and model training in Natural Language Processing (NLP). This article outlines a comprehensive preprocessing pipeline, leveraging Python and the NLTK library, to convert textual data into a usable form for training and modeling. Data PipelineData Pipeline is the process of transforming the data from the initial one to other form passing it through various stages. In the case of textual data, such as a collection of words or language, data pipelining is essential. This is because we cannot directly apply our statistical formulae or train the model on raw text. Therefore, it becomes necessary to pre-process the text and convert it into a numeric form. This numeric representation is valuable for interpretation, analysis, and model training. Data analytics involves multiple stages, starting with data collection and followed by data processing. The data prepared for analysis, involving steps like cleaning, transformation, and feature extraction. Finally, the insights derived from the processed data are presented, supporting informed decision-making in Firms and Organizations.  Data Pipelining Structure Steps involves for creating pipeline to make data usable for training and modelling is listed below…

Build a Data Pipeline to ceovert text to Numeric VectorWe’ll start with installing necessary libraries using movie reviews dataset, an opensource dataset from kaggle. Dataset Link: train_reviews.csv Python3

Python

Output: review label Splitting the Data Python3

Output: Text reviews examples: Data Pipeline1. Data preprocessing & cleaningIn the initial stages of natural language processing, raw data undergoes preprocessing to prepare for subsequent analysis. This process includes steps such as tokenization of words and sentences as well as removal of stopwords from raw text. Stopwords are the commonly used words and are often removed from texts while Natural language processing. These words do not significantly contribute to meaning of sentence whether they exists or not. For processing our text, Bag-of-words model is generally used. In this model, sequence of words does not matter and focus on single word as a feature. Removing stopwords is crucial in this context, not only to enhance the efficiency of training model but also to give more importance to more meaningful words in the analysis. eg. stopwords might include words like “a”, “an”, “the”, “and”, “but”, “or”, “in”, “on”, “at”, “with”,………… NLTK `corpus.stopwords`corpora and`tokenize`module facilitate for tokenization and stopwords removing with ease. 2. Data LemmatizationLemmatization is crucial step in text processing that involves reducing words to their base or root form. This process simplifies variations in word forms, enhancing text analysis by grouping similar words together that helps to limit our feature length. Large unique words can cause potential issues like memory error, or time limit exceeding, these techniques helps us to minimize our columns or feature length. NLTK ModulesPython

Preprocessing CodePython3

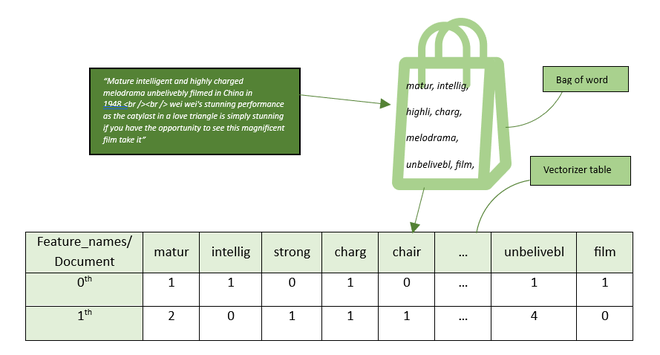

Output: mature intelligent highly charged melodrama unbelivebly filmed china 1948. wei wei 's stunning performance catylast love triangle simply stunning oppurunity see magnificent film take 3. Building Vocabulary and VectorizationBuilding a vocabulary refers to the process of selecting and retaining a limited set of meaningful unique words after preprocessing text data. In the context mentioned, our goal is to minimize our vocabulary size by extracting feature words, stemming them, tokenizing the text and removing stopwords. Each action perfomed to text is for minimizing our bag size rather than keeping all the words and maintaining their count per document it is good to keep only relevant and meaningful text.  Building Vocabulary & Vectorizaton Vectorization, is a Critical process in text processing that converts the words into numeric data so that it becomes easy to do mathematical operations over them. Since, many classifiers and model which rely on statistical computation understands only numeric data. Vectorizer table stores frequency of unique words per document. This numeric data table corresponds to texts data can be used in different ML model. bruteforcely, we can achieve the same goal through word-index mapping and word count frequency but this will be time consuming task and also inefficient. Fortunately, Scikit-learn offers `feature_extraction.text.CountVectorizer`module for streamlining the process in fast & efficient manner. Python3

Output: [[1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 2]]

This vectorized_train_x is resultant numeric table and is ready to be used for training model. Pipeline Function For Text to Numeric VectorPython

Output: [[1 0 0 ... 0 0 0] ConclusionData pipelining plays a crucial role in effective processing of text data within the field of Natural Language Processing (NLP). The complexity and variety of tasks in NLP, such as text classification, sentiment analysis, and named entity recognition facilitate a well structured pipeline. |

Reffered: https://www.geeksforgeeks.org

| AI ML DS |

| Related |

|---|

| |

| |

| |

| |

| |

Type: | Geek |

Category: | Coding |

Sub Category: | Tutorial |

Uploaded by: | Admin |

Views: | 13 |