
Classification on Imbalanced Data using TensorFlow

In modern machine learning, imbalanced datasets are a common problem that degrades model performance in classification tasks. In this article, we implement a deep learning model with TensorFlow to classify an imbalanced dataset.

What is Imbalanced Data?

Many real-world datasets are imbalanced. A dataset is called imbalanced when the distribution of the classes of its target variable is highly skewed, i.e. one class (the minority class) occurs far less often than the other (the majority class). Training on such data biases the model toward the majority class. It can reach high accuracy by mostly predicting that class while performing poorly on the minority class, which is often the class we care about most. In practical terms, consider a medical diagnosis model that tries to identify a rare disease, where positive cases are scarce compared with negative ones. The skewed distribution can compromise the model's ability to generalize and to make accurate predictions on the rare cases, so imbalanced datasets must be handled carefully.

Step-by-Step Code Implementations

Importing required libraries

First, we import the required Python libraries: NumPy, Pandas, Matplotlib, Seaborn, TensorFlow and scikit-learn.

Dataset loading

Before loading the dataset, we fix the random seeds so that runs are reproducible. Next, we load the dataset and apply a simple outlier removal so that extreme values do not bias the final results.
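Below is one possible version of this step, and every part of it is an assumption. The article's dataset file is not shown, so scikit-learn's bundled Wisconsin breast cancer data (load_breast_cancer) stands in for it. That dataset has 30 features, which matches the input size implied by the model summary further down (496 = 30 × 16 + 16), and 'Malignant'/'Benign' labels. The "simple outlier removal" is implemented as a z-score filter that drops any row with a feature more than 3 standard deviations from its column mean. The seed value 42, the helper names and the diagnosis column name are illustrative.

```python
import random

import numpy as np
import pandas as pd
from sklearn.datasets import load_breast_cancer

SEED = 42  # illustrative seed value


def set_seeds(seed: int) -> None:
    """Seed Python's and NumPy's random number generators.

    With TensorFlow installed, tf.keras.utils.set_random_seed(seed) seeds
    Python, NumPy and TensorFlow in a single call.
    """
    random.seed(seed)
    np.random.seed(seed)


def remove_outliers_zscore(df: pd.DataFrame, cols, threshold: float = 3.0) -> pd.DataFrame:
    """Keep only rows whose values in `cols` all lie within `threshold` std devs of the column mean."""
    feats = df[cols]
    z = (feats - feats.mean()) / feats.std()
    keep = (z.abs() < threshold).all(axis=1)
    return df[keep].reset_index(drop=True)


set_seeds(SEED)

# Stand-in dataset; scikit-learn encodes its target as 0 = malignant, 1 = benign.
bunch = load_breast_cancer(as_frame=True)
df = bunch.frame.drop(columns="target")
df["diagnosis"] = bunch.frame["target"].map({0: "Malignant", 1: "Benign"})

feature_cols = list(bunch.feature_names)
df_clean = remove_outliers_zscore(df, feature_cols)
print(f"Rows before: {len(df)}, after outlier removal: {len(df_clean)}")
```

The z-score filter is only one way to do this. An IQR rule works equally well, and the number of rows removed depends on the rule and threshold chosen.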

Data pre-processing and splitting

Now we scale the numerical columns of the dataset with MinMaxScaler and encode the target column with LabelEncoder. The dataset is then divided into training and testing sets in an 80:20 ratio.
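Below is a minimal sketch of this step with scikit-learn. So that it runs on its own, it loads scikit-learn's bundled breast cancer data as a stand-in for the article's unshown dataset. It also departs from the description in two deliberate ways. First, the scaler is fitted on the training split only and then applied to the test split, which keeps test-set statistics out of training. Second, stratify=y keeps the class ratio the same in both splits. The value random_state=42 is illustrative.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder, MinMaxScaler

# Stand-in data with string labels like the article's target column.
bunch = load_breast_cancer(as_frame=True)
df = bunch.frame.drop(columns="target")
df["diagnosis"] = bunch.frame["target"].map({0: "Malignant", 1: "Benign"})

feature_cols = list(bunch.feature_names)
X = df[feature_cols]

# LabelEncoder sorts labels alphabetically: "Benign" -> 0, "Malignant" -> 1.
encoder = LabelEncoder()
y = encoder.fit_transform(df["diagnosis"])

# 80:20 split; stratify keeps the Benign/Malignant ratio equal in both parts.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Learn min/max from the training split only, then apply the same scaling to both splits.
scaler = MinMaxScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

print(X_train.shape, X_test.shape)
```

Because LabelEncoder assigns codes alphabetically, 'Malignant' (the minority class) ends up as class 1.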

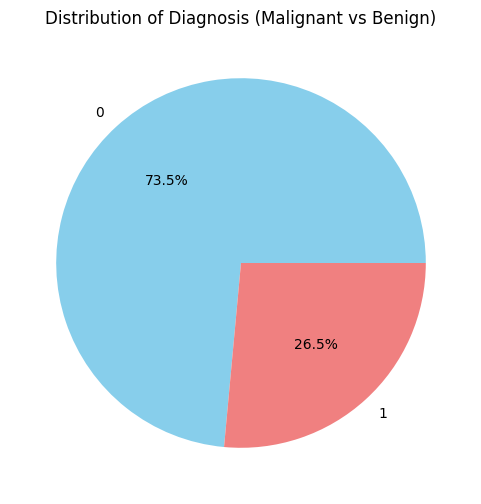

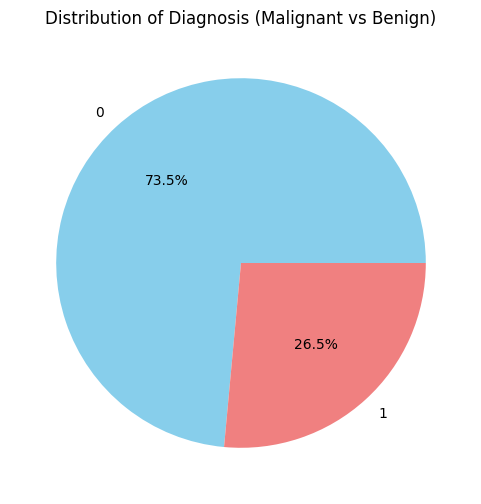

Check for imbalance

Since we are classifying an imbalanced dataset, we first need to see how the target column is distributed. This exploratory data analysis shows how strong the imbalance in the dataset is.
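A minimal sketch of this check follows. It counts each class with pandas and reports the majority-to-minority ratio. The labels come from scikit-learn's bundled breast cancer data, an assumed stand-in for the article's dataset, without any outlier removal, so the numbers differ from the article's plot. The helper name class_distribution is hypothetical.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer


def class_distribution(labels) -> pd.Series:
    """Count how often each class occurs, most frequent first."""
    return pd.Series(labels).value_counts()


# Stand-in labels named like the article's classes (scikit-learn: 0 = malignant, 1 = benign).
target = load_breast_cancer().target
labels = pd.Series(target).map({0: "Malignant", 1: "Benign"})

counts = class_distribution(labels)
imbalance_ratio = counts.max() / counts.min()
print(counts)
print(f"Majority/minority ratio: {imbalance_ratio:.2f}")
```

On this unfiltered stand-in data the ratio is about 1.68 (357 vs. 212). To draw the counts as a bar chart, seaborn.countplot(x=labels) is one option.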

Output: Distribution of target column

The dataset is clearly imbalanced: 'Benign' samples outnumber 'Malignant' samples roughly three to one. We can now proceed with this dataset.

Class weights calculation

Because the classes are imbalanced, we manually calculate a class weight for both the majority and the minority class. The weights are stored in a variable and passed directly to the model during training.
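The weights printed below are consistent with the common "balanced" heuristic w_c = N / (K · N_c), where N is the number of training samples, K the number of classes and N_c the number of samples in class c. scikit-learn uses this formula for class_weight="balanced", and the TensorFlow imbalanced-data tutorial writes it as (1 / neg) * (total / 2). The article's own code is not shown, so treat this sketch as an assumption. The label counts 252 and 92 are illustrative; they are one split of 344 samples that yields exactly the printed weights.

```python
import numpy as np


def balanced_class_weights(y) -> dict:
    """Return {class: N / (K * N_c)} so each class contributes equally to the total loss."""
    y = np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    n_samples, n_classes = len(y), len(classes)
    return {int(c): n_samples / (n_classes * n_c) for c, n_c in zip(classes, counts)}


# Illustrative training labels: 252 samples of class 0 and 92 of class 1.
y_train_example = np.array([0] * 252 + [1] * 92)
class_weight = balanced_class_weights(y_train_example)
for cls, w in class_weight.items():
    print(f"Weight for class {cls}: {w:.2f}")
```

With these counts the loop prints 0.68 and 1.87. The resulting dictionary has the shape Keras's model.fit expects for its class_weight argument: class index mapped to weight.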

Output: Weight for class 0: 0.68

Weight for class 1: 1.87

Defining the Deep Learning model

Now we define a three-layer deep learning model. It uses 'binary_crossentropy' as the loss, the standard choice for binary classification, and 'adam' as the optimizer.
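The following Keras sketch is consistent with the summary printed below. It has three Dense layers of 16, 8 and 1 units on 30 input features, giving 641 parameters. The summary does not show activations, so ReLU for the hidden layers and a sigmoid output are assumptions. So is the accuracy metric, which the training log suggests. The function name build_model is hypothetical.

```python
import tensorflow as tf

N_FEATURES = 30  # inferred from the summary: 496 = 30 * 16 + 16


def build_model(n_features: int = N_FEATURES) -> tf.keras.Model:
    """Three Dense layers (16 -> 8 -> 1) for binary classification."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(n_features,)),
        tf.keras.layers.Dense(16, activation="relu"),    # hidden layer (activation assumed)
        tf.keras.layers.Dense(8, activation="relu"),     # hidden layer (activation assumed)
        tf.keras.layers.Dense(1, activation="sigmoid"),  # probability of class 1 (Malignant)
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


model = build_model()
model.summary()
```

The sigmoid output gives a probability between 0 and 1. Turning it into a class label is what the decision threshold (the article's "bias factor") does later.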

Output:

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| dense (Dense) | (None, 16) | 496 |
| dense_1 (Dense) | (None, 8) | 136 |
| dense_2 (Dense) | (None, 1) | 9 |

Total params: 641 (2.50 KB)

Trainable params: 641 (2.50 KB)

Non-trainable params: 0 (0.00 Byte)

Model training

We are now ready to train the model for 80 epochs. Training for more epochs may improve the results further, as long as the validation loss keeps decreasing. We also pass the class weights calculated earlier through the class_weight argument, so that mistakes on the minority class are penalized more heavily during training.
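Below is a self-contained sketch of the training call. The article's data and code are not shown, so the sketch trains the same 16-8-1 architecture on synthetic, hypothetical data with about 27% positives. The 80 epochs and the class_weight dictionary (computed as N / (2 · N_c)) mirror the text. The architecture's activations, using the test split as validation_data, and the seed are assumptions; batch_size=32 is simply the Keras default written out.

```python
import numpy as np
import tensorflow as tf

EPOCHS = 80  # as in the article
rng = np.random.default_rng(0)

# Hypothetical synthetic data: 30 features in [0, 1); label 1 when feature 0 > 0.73 (~27% positives).
X_train = rng.random((344, 30)).astype("float32")
X_test = rng.random((86, 30)).astype("float32")
y_train = (X_train[:, 0] > 0.73).astype("float32")
y_test = (X_test[:, 0] > 0.73).astype("float32")

# Balanced class weights N / (2 * N_c) from the training labels.
n_total = len(y_train)
n_pos = float(y_train.sum())
n_neg = n_total - n_pos
class_weight = {0: n_total / (2 * n_neg), 1: n_total / (2 * n_pos)}

tf.keras.utils.set_random_seed(0)  # reproducible weight initialization
model = tf.keras.Sequential([
    tf.keras.Input(shape=(30,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

history = model.fit(
    X_train,
    y_train,
    epochs=EPOCHS,
    batch_size=32,                     # Keras default, written out for clarity
    validation_data=(X_test, y_test),  # assumption: test split reused for validation
    class_weight=class_weight,         # errors on class 1 weigh more in the loss
    verbose=0,                         # set to 1 for a per-epoch progress log
)
```

Keras applies class_weight by scaling each sample's loss by the weight of its class. The effect is the same as oversampling the minority class, but no data is duplicated.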

Output: Epoch 1/80

11/11 [==============================] - 1s 25ms/step - loss: 0.6723 - accuracy: 0.6686 - val_loss: 0.6508 - val_accuracy: 0.7442

.......................................

.......................................

Epoch 80/80

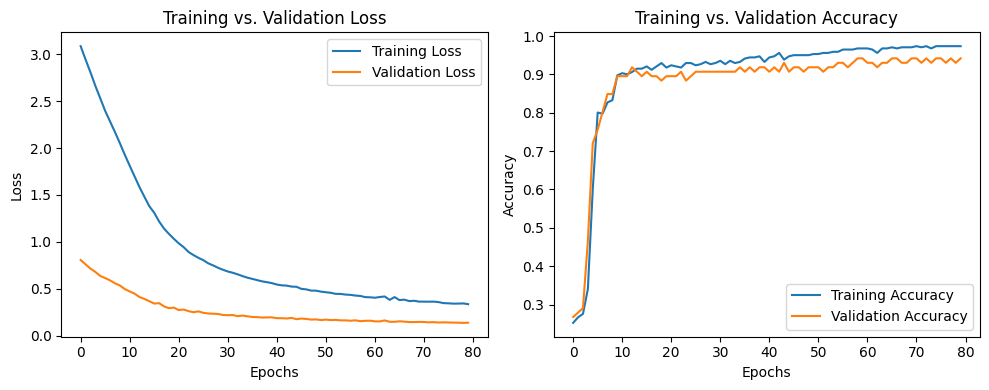

11/11 [==============================] - 0s 5ms/step - loss: 0.0729 - accuracy: 0.9677 - val_loss: 0.1415 - val_accuracy: 0.9419As the output is large so we are only giving the initial and last epoch for understanding. Visualizing the training processFor better understanding how our model is learning and progressing at each epoch, we will plot the loss vs. accuracy curve. Python3
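A minimal Matplotlib sketch of such a plot follows. It draws training and validation loss in one panel and training and validation accuracy in another. It expects a dictionary shaped like Keras's history.history. The toy history below uses made-up values, and the function name plot_history is hypothetical.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; drop this line in a notebook
import matplotlib.pyplot as plt


def plot_history(history: dict):
    """Plot training/validation loss and accuracy per epoch, side by side."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))

    ax_loss.plot(epochs, history["loss"], label="train loss")
    ax_loss.plot(epochs, history["val_loss"], label="val loss")
    ax_loss.set_xlabel("Epoch")
    ax_loss.set_ylabel("Loss")
    ax_loss.legend()

    ax_acc.plot(epochs, history["accuracy"], label="train accuracy")
    ax_acc.plot(epochs, history["val_accuracy"], label="val accuracy")
    ax_acc.set_xlabel("Epoch")
    ax_acc.set_ylabel("Accuracy")
    ax_acc.legend()

    fig.tight_layout()
    return fig


# Toy history with made-up values; pass history.history from model.fit in practice.
toy = {
    "loss": [0.67, 0.45, 0.30],
    "val_loss": [0.65, 0.48, 0.35],
    "accuracy": [0.67, 0.85, 0.92],
    "val_accuracy": [0.74, 0.86, 0.90],
}
fig = plot_history(toy)
```

Plotting the validation curves next to the training curves makes overfitting visible: training loss keeps falling while validation loss starts to rise.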

Output: Loss vs. Accuracy curve

According to the plot, the model keeps improving as training goes on, so more epochs may give better results, provided the validation loss keeps decreasing rather than rising (a sign of overfitting).

Setting best Bias factor

To evaluate the model, we first need to set the "bias factor". This is the decision threshold applied to the model's sigmoid output: samples with a predicted probability above it are assigned to the minority class (1), and the rest to the majority class (0). We could simply assume a value such as 0.5, but it is better to tune it. Here we pick the threshold that gives the best F1-score and use that value from here on.
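A minimal sketch of this tuning follows. It scans candidate thresholds and keeps the one with the highest F1-score. The grid 0.1 to 0.9 in steps of 0.1, the >= comparison and the function name tune_threshold are assumptions, and the toy probabilities are made up. Ideally the threshold is tuned on a validation split rather than the test set; otherwise the test metrics come out optimistic.

```python
import numpy as np
from sklearn.metrics import f1_score


def tune_threshold(y_true, y_prob, candidates=None):
    """Return (threshold, F1) for the candidate threshold with the highest F1-score.

    A sample is labeled 1 when its predicted probability is >= the threshold.
    """
    y_prob = np.asarray(y_prob).ravel()
    if candidates is None:
        candidates = np.round(np.linspace(0.1, 0.9, 9), 2)  # 0.1, 0.2, ..., 0.9
    scores = [f1_score(y_true, (y_prob >= t).astype(int), zero_division=0) for t in candidates]
    best = int(np.argmax(scores))  # first threshold that reaches the maximum F1
    return float(candidates[best]), float(scores[best])


# Toy example; in practice y_prob = model.predict(X_val).ravel().
y_true_toy = np.array([0, 0, 0, 0, 1, 1])
y_prob_toy = np.array([0.10, 0.20, 0.30, 0.35, 0.45, 0.80])
best_t, best_f1 = tune_threshold(y_true_toy, y_prob_toy)
print(f"Best Bias: {best_t}")
```

For the toy data this prints "Best Bias: 0.4": at 0.4 both positives are caught and no negative is flagged, so F1 = 1.0. Lower thresholds add false positives, and higher ones miss the 0.45 case.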

Output: 3/3 [==============================] - 0s 3ms/step

Best Bias: 0.4

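Model Evaluation

For binary classification on an imbalanced dataset, accuracy alone is not a sufficient measure. On this test set, a model that always predicted 'Benign' would already be about 74% accurate (64 of 86 samples). Besides accuracy, we therefore evaluate the model in terms of precision, recall and F1-score, applying the tuned bias factor (0.4) as the decision threshold.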
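Below is a minimal sketch of this evaluation with scikit-learn. It converts probabilities into labels with the tuned threshold, prints a classification report, and computes the majority-class baseline mentioned above from the test supports (64 and 22) shown in the report below. The helper names and the toy probabilities are hypothetical.

```python
import numpy as np
from sklearn.metrics import classification_report


def predict_labels(y_prob, threshold: float) -> np.ndarray:
    """Turn sigmoid outputs into 0/1 labels using the tuned threshold."""
    return (np.asarray(y_prob).ravel() >= threshold).astype(int)


def majority_baseline_accuracy(y_true) -> float:
    """Accuracy of a trivial model that always predicts the most frequent class."""
    _, counts = np.unique(y_true, return_counts=True)
    return float(counts.max() / counts.sum())


# Test-set class sizes taken from the support column of the article's report.
y_test_supports = np.array([0] * 64 + [1] * 22)
print(f"Always-'Benign' accuracy: {majority_baseline_accuracy(y_test_supports):.3f}")

# Toy probabilities (made up) to show the call; use model.predict(X_test) in practice.
y_true_toy = np.array([0, 0, 0, 1, 1])
y_prob_toy = np.array([0.05, 0.30, 0.55, 0.42, 0.90])
y_pred_toy = predict_labels(y_prob_toy, threshold=0.4)
print(classification_report(y_true_toy, y_pred_toy, target_names=["Benign", "Malignant"]))
```

The baseline line prints 0.744. Any useful model has to beat this, and it has to do so on minority-class recall as well, not just on accuracy.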

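Output:

| Class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.97 | 0.92 | 0.94 | 64 |
| 1 | 0.80 | 0.91 | 0.85 | 22 |
| accuracy | | | 0.92 | 86 |
| macro avg | 0.88 | 0.92 | 0.90 | 86 |
| weighted avg | 0.92 | 0.92 | 0.92 | 86 |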

Confusion matrix

Now let us visualize the confusion matrix, which shows how the predicted labels compare with the actual ones.
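The plot itself is not reproduced here, so the sketch below works backwards from the classification report. With supports of 64 and 22, the reported precision (0.80) and recall (0.91) for class 1 are consistent only with TN = 59, FP = 5, FN = 2 and TP = 20. Building label arrays from those counts and passing them to scikit-learn's confusion_matrix shows how each cell feeds the metrics. These arrays are a reconstruction, not the article's data.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, f1_score, precision_score, recall_score

# Labels rebuilt from the counts implied by the report (class 1 = Malignant):
# TN = 59, FP = 5, FN = 2, TP = 20.
y_true = np.array([0] * 59 + [0] * 5 + [1] * 2 + [1] * 20)
y_pred = np.array([0] * 59 + [1] * 5 + [0] * 2 + [1] * 20)

cm = confusion_matrix(y_true, y_pred)  # rows = actual class, columns = predicted class
tn, fp, fn, tp = cm.ravel()
print(cm)
print(f"precision(1) = {precision_score(y_true, y_pred):.2f}")  # TP / (TP + FP) = 20 / 25
print(f"recall(1)    = {recall_score(y_true, y_pred):.2f}")     # TP / (TP + FN) = 20 / 22
print(f"f1(1)        = {f1_score(y_true, y_pred):.2f}")
print(f"accuracy     = {(tn + tp) / cm.sum():.2f}")            # 79 / 86
```

To draw the matrix as a heatmap, seaborn.heatmap(cm, annot=True, fmt="d") is one option. Only 2 of the 22 malignant cases are missed, which is the number that matters most in a diagnosis setting.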

Output:

Conclusion

Classification on imbalanced datasets is a crucial task, since many real-world datasets are imbalanced. With class weights and a tuned decision threshold, our model reached 92% accuracy on the test set, an F1-score of 0.85 on the minority ('Malignant') class and a macro-averaged F1-score of 0.90. These results indicate that it performs well on both classes.

Referred: https://www.geeksforgeeks.org
