
In this article, we will learn about the hidden layer perceptron. A hidden layer perceptron is simply another name for a neural network with one or more hidden layers, more commonly called a multilayer perceptron. The hidden layers let the network learn complex, non-linear functions for a task.

Hidden Layer Perceptron in TensorFlow

The figure above shows the simplest form of a hidden layer perceptron, with a single hidden layer. The input to the final layer is the output of the neurons in the hidden layer. In general, in a hidden layer perceptron network the input to the current layer is the output of the previous layer.

We will see how to implement a hidden layer perceptron network using TensorFlow. The data used for this purpose is the famous Facial Recognition dataset.

Importing Libraries and Dataset
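NumPy alone is enough to sketch the data flow described above, where each layer's output becomes the next layer's input. This is only an illustration: the layer sizes, the random weights, and the choice of ReLU and softmax activations are assumptions, not details taken from the article.

```python
import numpy as np

def relu(z):
    # Element-wise non-linearity applied to the hidden layer.
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
n_inputs, n_hidden, n_classes = 4, 3, 2  # hypothetical sizes

# Randomly initialised weights and zero biases (untrained, for shape checking only).
W1, b1 = rng.normal(size=(n_inputs, n_hidden)), np.zeros(n_hidden)
W2, b2 = rng.normal(size=(n_hidden, n_classes)), np.zeros(n_classes)

x = rng.normal(size=(5, n_inputs))     # a batch of 5 samples
hidden = relu(x @ W1 + b1)             # output of the hidden layer
output = softmax(hidden @ W2 + b2)     # final layer consumes the hidden output

print(hidden.shape, output.shape)  # (5, 3) (5, 2)
```

Each row of `output` sums to 1, so it can be read as a probability distribution over the classes.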


Now let’s create a data frame of the image paths and the classes to which they belong. A data frame makes it easy to analyze how the data is distributed across the classes.
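One way to build such a data frame is sketched below. It is not the article's original code: the helper `build_dataframe`, the column names, the `root/<class>/<image>` folder layout, and the class names `happy` and `sad` are all assumptions made for illustration. The demo creates empty placeholder files in a temporary folder so it runs without the dataset.

```python
import tempfile
from pathlib import Path

import pandas as pd

def build_dataframe(root):
    """Collect one (filepath, label) row per image, assuming root/<class>/<image>."""
    rows = [
        {"filepath": str(p), "label": p.parent.name}
        for p in sorted(Path(root).glob("*/*"))
        if p.suffix.lower() in {".jpg", ".jpeg", ".png"}
    ]
    return pd.DataFrame(rows, columns=["filepath", "label"])

# Demo on a throwaway folder with hypothetical class names.
with tempfile.TemporaryDirectory() as root:
    for cls, n_files in [("happy", 3), ("sad", 2)]:
        (Path(root) / cls).mkdir()
        for i in range(n_files):
            (Path(root) / cls / f"{i}.jpg").touch()
    df = build_dataframe(root)

print(len(df))                                # number of images found
print(df["label"].value_counts().to_dict())   # images per class
```

On the article's dataset the same kind of frame has 28821 rows, one per image.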

Output: 28821


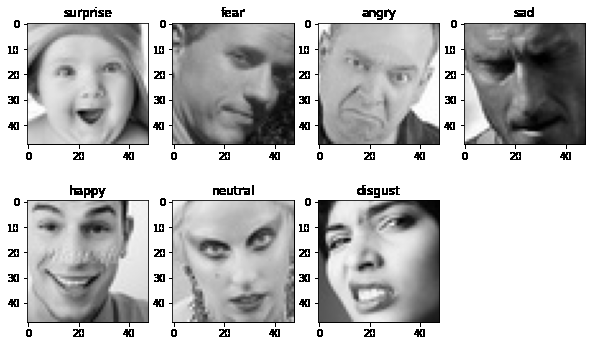

Output: bar chart of the number of images in each class

Data Visualization

Here we can clearly see that this dataset is not balanced. In this article, however, our main goal is to learn what a hidden layer perceptron is and how we can use it.

Output: sample image from each class

Now let’s convert the list of images to a NumPy array and convert the labels to one-hot encoded vectors over the 7 classes.
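A minimal sketch of this conversion follows. The random stand-in images, the 48×48 grayscale size (inferred from 2304 = 48 × 48), the integer label ids, and the scaling to the range 0 to 1 are assumptions rather than details confirmed by the article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the loaded images: 5 grayscale images of 48x48 pixels.
images = rng.integers(0, 256, size=(5, 48, 48), dtype=np.uint8)
labels = np.array([0, 3, 6, 3, 1])  # hypothetical integer class ids in 0..6

# Flatten each image into a 2304-long vector and scale pixels to [0, 1].
X = images.reshape(len(images), -1).astype("float32") / 255.0

# Row i of the 7x7 identity matrix is the one-hot vector for class i.
Y = np.eye(7, dtype="float32")[labels]

print(X.shape, Y.shape)  # (5, 2304) (5, 7)
```

Indexing the identity matrix with the label array is a compact NumPy idiom for one-hot encoding; `argmax` along axis 1 recovers the original class ids.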

Output: ((28821, 2304), (28821, 7))

To evaluate the performance of the model as training goes on, we need to split the whole data into training and validation sets.
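The split can be sketched with a shuffled index array. The helper `train_val_split`, the 5% validation fraction, and the seed are assumptions; the fraction is chosen because holding out 5% of 28821 samples (rounded up) gives 1442 validation rows, which is consistent with the shapes the article reports.

```python
import math

import numpy as np

def train_val_split(X, Y, val_fraction=0.05, seed=0):
    """Shuffle the row indices and hold out a fraction of them for validation."""
    n = len(X)
    n_val = math.ceil(n * val_fraction)
    order = np.random.default_rng(seed).permutation(n)
    val_idx, train_idx = order[:n_val], order[n_val:]
    return X[train_idx], X[val_idx], Y[train_idx], Y[val_idx]

# Placeholder arrays with the same column counts as the article's data.
X = np.zeros((200, 2304), dtype="float32")
Y = np.zeros((200, 7), dtype="float32")

X_train, X_val, Y_train, Y_val = train_val_split(X, Y)
print(X_train.shape, X_val.shape)  # (190, 2304) (10, 2304)
```

Because the same index arrays are used for `X` and `Y`, every image stays paired with its label after shuffling.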

Output: ((27379, 2304), (1442, 2304))

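Model Architecture

We will implement a Sequential model which will contain the following parts: fully connected (Dense) hidden layers, BatchNormalization layers, a Dropout layer, and a final Dense output layer with 7 units, one for each class.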

Now we will implement a neural network with two hidden layers of 256 neurons each. These fully connected hidden layers are what make the model a hidden layer perceptron.

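While compiling a model, we provide three essential parameters: the optimizer, which updates the weights to minimize the loss; the loss function, which measures how far the predictions are from the targets; and the metrics, which are used to evaluate the model on the training and validation data.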
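The sketch below builds and compiles a model whose layer order and sizes follow the summary reported in this article. The activations, the dropout rate of 0.3, the Adam optimizer, and the categorical cross-entropy loss are assumptions, since the article's original code is not available here; only the AUC metric is confirmed by the training log.

```python
from tensorflow import keras
from tensorflow.keras import layers

model = keras.Sequential([
    keras.Input(shape=(2304,)),              # one flattened image per row
    layers.Dense(256, activation="relu"),    # first hidden layer (activation assumed)
    layers.BatchNormalization(),
    layers.Dense(256, activation="relu"),    # second hidden layer (activation assumed)
    layers.Dropout(0.3),                     # rate is an assumption
    layers.BatchNormalization(),
    layers.Dense(7, activation="softmax"),   # one output unit per class
])

model.compile(
    optimizer="adam",                        # assumed optimizer
    loss="categorical_crossentropy",         # matches one-hot encoded labels
    metrics=[keras.metrics.AUC(name="auc")],
)

print(model.count_params())
```

Naming the metric explicitly as `auc` keeps the log keys predictable (`auc` and `val_auc`) regardless of how many metric objects have been created earlier in the session.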

Let’s print the summary of our hidden layer perceptron model to see the number of parameters in each layer.

Output:

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| dense (Dense) | (None, 256) | 590080 |
| batch_normalization (BatchNormalization) | (None, 256) | 1024 |
| dense_1 (Dense) | (None, 256) | 65792 |
| dropout (Dropout) | (None, 256) | 0 |
| batch_normalization_1 (BatchNormalization) | (None, 256) | 1024 |
| dense_2 (Dense) | (None, 7) | 1799 |

Total params: 659,719

Trainable params: 658,695

Non-trainable params: 1,024
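The parameter counts in the summary can be reproduced by hand. The helper names below are hypothetical; the arithmetic uses the standard counts for a fully connected layer (weights plus biases) and for batch normalization (two trained and two running-statistic values per feature).

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

def batchnorm_params(n_features):
    """Return (trainable, non_trainable) counts.

    gamma and beta are trained; the moving mean and moving variance
    are running statistics and are not updated by gradient descent.
    """
    return 2 * n_features, 2 * n_features

bn_trainable, bn_frozen = batchnorm_params(256)

trainable = (
    dense_params(2304, 256)   # dense:   590080
    + bn_trainable            # batch_normalization (trainable half)
    + dense_params(256, 256)  # dense_1: 65792
    + bn_trainable            # batch_normalization_1 (trainable half)
    + dense_params(256, 7)    # dense_2: 1799
)
non_trainable = 2 * bn_frozen  # two BatchNormalization layers

print(trainable + non_trainable, trainable, non_trainable)  # 659719 658695 1024
```

The Dropout layer does not appear in the sum because it has no parameters.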

Model Training and Evaluation

Now we are ready to train our model.
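A training call might look like the sketch below. It is not the article's original code: it uses random synthetic data and a reduced one-hidden-layer model so that it runs quickly without the dataset. The 5 epochs match the article's log, and a batch size of 64 is consistent with its 428 steps per epoch for 27379 training rows; the optimizer and loss are assumptions.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

rng = np.random.default_rng(0)

# Synthetic stand-ins for the flattened images and one-hot labels.
X_train = rng.random((256, 2304), dtype=np.float32)
Y_train = np.eye(7, dtype="float32")[rng.integers(0, 7, size=256)]
X_val = rng.random((64, 2304), dtype=np.float32)
Y_val = np.eye(7, dtype="float32")[rng.integers(0, 7, size=64)]

# Reduced model, just large enough to demonstrate the fit call.
model = keras.Sequential([
    keras.Input(shape=(2304,)),
    layers.Dense(256, activation="relu"),
    layers.Dense(7, activation="softmax"),
])
model.compile(
    optimizer="adam",
    loss="categorical_crossentropy",
    metrics=[keras.metrics.AUC(name="auc")],
)

history = model.fit(
    X_train, Y_train,
    validation_data=(X_val, Y_val),  # evaluated at the end of every epoch
    batch_size=64,
    epochs=5,
    verbose=0,
)

print(sorted(history.history))  # ['auc', 'loss', 'val_auc', 'val_loss']
```

The metric values this sketch produces on random data are not meaningful; only the structure of the call and of `history.history` carries over to the real dataset.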

Output:

Epoch 1/5 428/428 [==============================] - 5s 8ms/step - loss: 1.8563 - auc: 0.6886 - val_loss: 1.6245 - val_auc: 0.7530

Epoch 2/5 428/428 [==============================] - 3s 7ms/step - loss: 1.6319 - auc: 0.7554 - val_loss: 1.5624 - val_auc: 0.7769

Epoch 3/5 428/428 [==============================] - 4s 8ms/step - loss: 1.5399 - auc: 0.7845 - val_loss: 1.5510 - val_auc: 0.7814

Epoch 4/5 428/428 [==============================] - 5s 11ms/step - loss: 1.4883 - auc: 0.7999 - val_loss: 1.5106 - val_auc: 0.7929

Epoch 5/5 428/428 [==============================] - 3s 8ms/step - loss: 1.4408 - auc: 0.8146 - val_loss: 1.4992 - val_auc: 0.7971

With two hidden layers, this neural network reaches a validation AUC-ROC score of about 0.80 after 5 epochs. Note that AUC is not the same as accuracy: it measures how well the model ranks the correct class above the incorrect ones across all decision thresholds, so a score of 0.8 does not mean that 80% of the predictions are correct.
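AUC and accuracy measure different things, which a toy binary example in plain Python makes concrete. The labels, scores, and helper functions below are made up for illustration, and the pairwise AUC computed here is the textbook definition rather than the threshold-based approximation a deep learning library may use.

```python
def accuracy(labels, scores, threshold=0.5):
    """Fraction of samples whose thresholded score matches the label."""
    preds = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

def roc_auc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

labels = [0, 0, 1, 1]
scores = [0.1, 0.2, 0.3, 0.4]  # positives always score higher than negatives

print(roc_auc(labels, scores))   # 1.0
print(accuracy(labels, scores))  # 0.5
```

Every positive outranks every negative, so the AUC is perfect, yet half of the predictions are wrong at a 0.5 threshold because both positives score below it.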

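Output of evaluating the model on the validation data:

Validation loss : 1.4992401599884033

Validation Accuracy : 0.7971429824829102

The value printed as "Validation Accuracy" matches val_auc from the final epoch, so it is the validation AUC rather than the classification accuracy.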

Referred: https://www.geeksforgeeks.org
