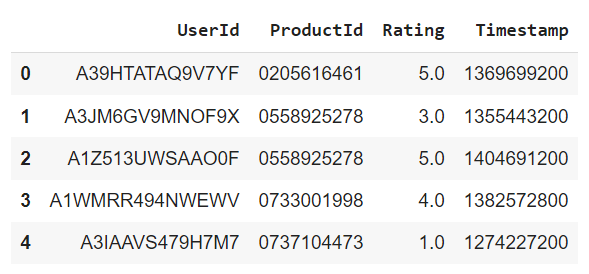

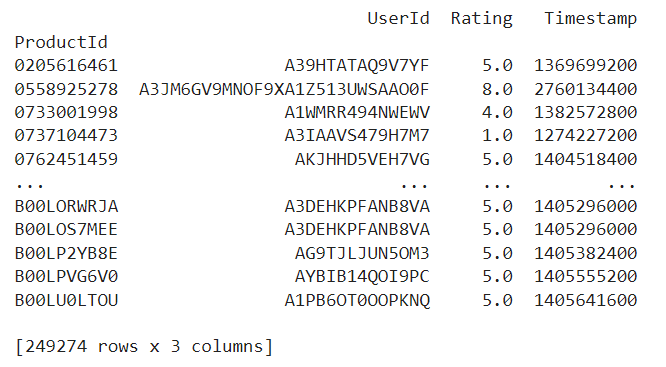

Pandas is a powerful Python library used mainly for data manipulation and analysis. A pandas DataFrame is a two-dimensional, mutable, heterogeneous tabular data structure. It closely resembles a spreadsheet or an SQL table, but it is created and manipulated with Python code. In this article, we will explore the limits of pandas DataFrames, discuss performance considerations, and provide example code to illustrate these concepts.

Factors Affecting DataFrame Performance

In general, the number of rows a pandas DataFrame can handle effectively depends on several factors. The main ones are the available memory (RAM), the number and data types of the columns, and the kinds of operations performed on the data.
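The sketch below shows how column count and data type drive memory use. It stores the same 100,000 hypothetical integer values (0-99) in three ways and compares the bytes reported by `DataFrame.memory_usage()`. Only column data is counted (`index=False`), and the column names are made up for illustration.

```python
import numpy as np
import pandas as pd

n_rows = 100_000
values = np.arange(n_rows) % 100  # hypothetical small integers in the range 0-99

# Same rows and values; only the number of columns and the dtype differ.
wide_int64 = pd.DataFrame({"x": values.astype("int64"), "y": values.astype("int64")})
narrow_int64 = pd.DataFrame({"x": values.astype("int64")})
narrow_int8 = pd.DataFrame({"x": values.astype("int8")})  # 0-99 fits in int8

for name, frame in [("2 x int64", wide_int64),
                    ("1 x int64", narrow_int64),
                    ("1 x int8", narrow_int8)]:
    # index=False: count only the column data, not the RangeIndex.
    size = frame.memory_usage(index=False).sum()
    print(f"{name}: {size:,} bytes for {len(frame):,} rows")
```

An `int64` value takes 8 bytes and an `int8` value takes 1 byte. For the same number of rows, a wider table or a larger dtype therefore raises the memory cost proportionally.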

Practical Limits

There is no hard limit on the number of rows a pandas DataFrame can hold. In practice, the limit is set by the factors discussed above. On a typical modern computer with 8-16 GB of RAM, DataFrames with up to several million rows can be handled comfortably. Let us categorize DataFrames by the number of rows they contain and their performance characteristics.
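One practical way to see where your own data falls is to measure it. The sketch below builds a hypothetical DataFrame of one million rows with three 8-byte numeric columns. It measures the frame with `memory_usage(deep=True)` and turns the total into a rough bytes-per-row figure. The 8 GiB budget is an assumption matching the lower end of the RAM range mentioned above. The estimate also ignores the temporary copies many operations create, so the real headroom is smaller.

```python
import numpy as np
import pandas as pd

n_rows = 1_000_000
# Hypothetical numeric table: three 8-byte columns per row.
df = pd.DataFrame({
    "a": np.arange(n_rows, dtype="int64"),
    "b": np.random.default_rng(0).random(n_rows),  # float64
    "c": np.zeros(n_rows, dtype="float64"),
})

# deep=True would also count the contents of object/string columns.
total_bytes = df.memory_usage(deep=True, index=False).sum()
bytes_per_row = total_bytes / n_rows
print(f"{total_bytes / 1024**2:.1f} MiB for {n_rows:,} rows "
      f"({bytes_per_row:.0f} bytes per row)")

# Rough upper bound for an assumed 8 GiB of RAM, ignoring temporary copies
# and everything else that shares the machine's memory.
ram_budget = 8 * 1024**3
print(f"about {ram_budget // bytes_per_row:,.0f} rows of this shape would fill 8 GiB")
```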

Optimization Techniques

Now let us look at a few ways to optimize row handling in pandas DataFrames, with the help of code examples.

Use of Efficient Data Types

Converting columns to more efficient data types reduces the memory needed per row. For example, the 'category' data type suits columns with a limited number of unique values. In this example, we define a dictionary of sample data and create a DataFrame from it with the pandas DataFrame() constructor. Then, iterating over each column with a for loop, we calculate the number of unique values and the total number of values. If the ratio of unique values to total values is less than 0.5, the column is converted to the 'category' data type. This type is more memory-efficient for columns with relatively few unique values compared to the total number of values.

Chunk Processing

Instead of loading all the rows of a dataset at once, we can load and process the data in chunks. The chunksize parameter does this, and it is useful when loading files that contain a million or more rows. In this example, we first define the chunk size and an empty chunks list to store the processed chunks. Then we read a large CSV file in chunks of 100,000 rows using the chunksize parameter of pd.read_csv(), and we store each processed chunk in the list.

Usage of Parallel Computing Library (Dask)

An alternative approach is to use a parallel computing library such as Dask. Dask integrates easily with pandas and lets us work with datasets that are too large to fit into physical memory at once.
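The category-conversion example described under Use of Efficient Data Types can be sketched as follows. The article's original code is not reproduced here, and the sample dictionary below is hypothetical. The loop follows the described steps: it counts unique and total values per column and converts the column to 'category' when the ratio is below 0.5. Memory is measured before and after with `memory_usage(deep=True)`.

```python
import pandas as pd

# Hypothetical sample data: 'id' is unique, 'city' and 'grade' repeat often.
data = {
    "id": list(range(1000)),
    "city": ["Delhi", "Mumbai", "Pune", "Delhi"] * 250,
    "grade": ["A", "B", "C", "A", "B"] * 200,
}
df = pd.DataFrame(data)

before = df.memory_usage(deep=True).sum()

for col in df.columns:
    num_unique = df[col].nunique()
    num_total = len(df[col])
    # Convert only low-cardinality columns (unique/total < 0.5) to 'category'.
    if num_unique / num_total < 0.5:
        df[col] = df[col].astype("category")

after = df.memory_usage(deep=True).sum()
print(df.dtypes)
print(f"Memory before: {before:,} bytes, after: {after:,} bytes")
```

A 'category' column stores each distinct value once plus a small integer code per row. That is why the saving grows with the number of rows and shrinks as the share of unique values rises.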
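The chunk-processing steps described under Chunk Processing can be sketched like this. Several pieces are hypothetical: the function name `process_in_chunks`, the filtering step, and the in-memory CSV that stands in for a large file. With a real file, you would pass its path instead of the buffer. Because `pd.read_csv(..., chunksize=...)` yields one DataFrame per chunk, only one unprocessed chunk of 100,000 rows is held in memory at a time.

```python
import io
import pandas as pd

def process_in_chunks(source, chunksize=100_000):
    """Read a CSV in pieces of `chunksize` rows and combine the processed pieces."""
    chunks = []  # processed chunks are collected here
    for chunk in pd.read_csv(source, chunksize=chunksize):
        # Hypothetical processing step: keep rows whose 'value' is at least 5.
        processed = chunk[chunk["value"] >= 5]
        chunks.append(processed)
    # Combine the (smaller) processed pieces into a single DataFrame.
    return pd.concat(chunks, ignore_index=True)

# Stand-in for a large file: an in-memory CSV with 250,000 rows (3 chunks).
n_rows = 250_000
sample = pd.DataFrame({"id": range(n_rows)})
sample["value"] = sample["id"] % 10
buffer = io.StringIO()
sample.to_csv(buffer, index=False)
buffer.seek(0)

result = process_in_chunks(buffer)
print(len(result))
```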

Referred: https://www.geeksforgeeks.org
