
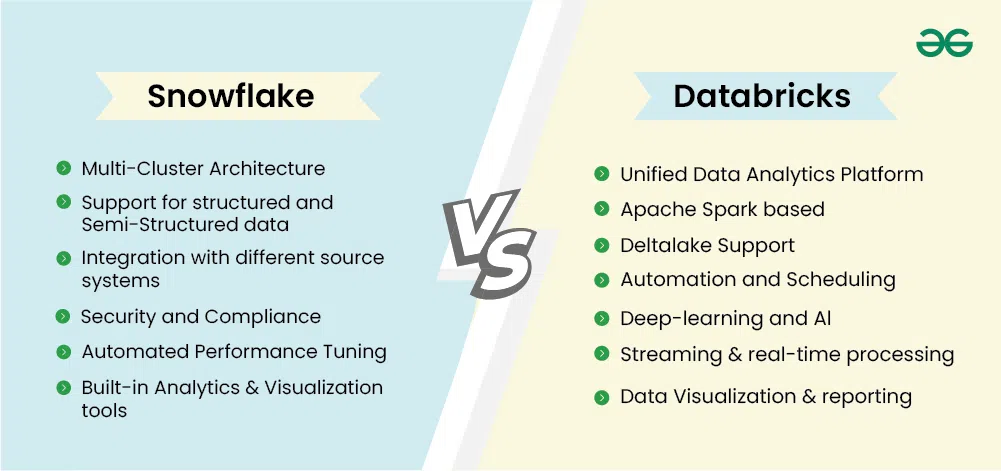

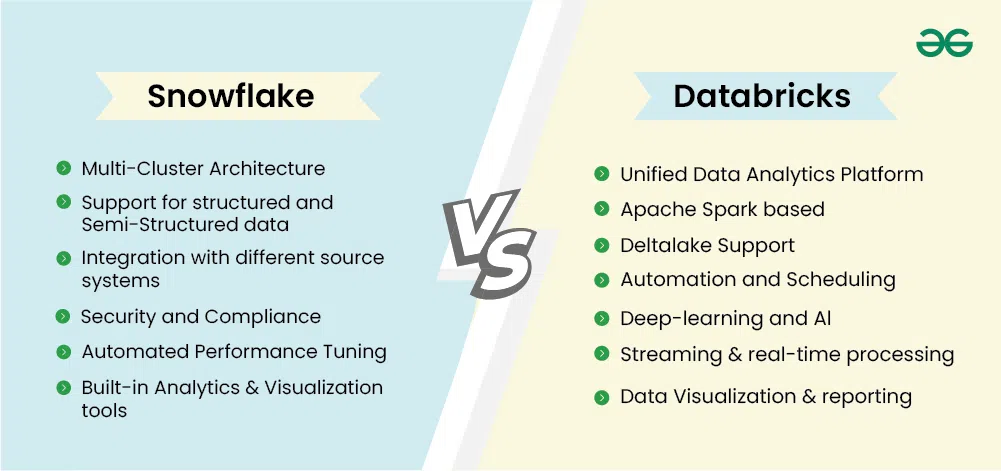

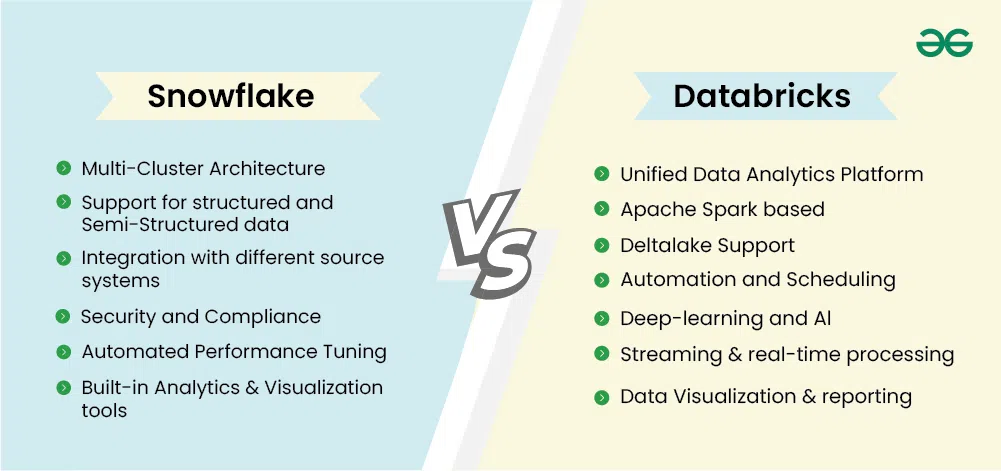

The rapid growth of data in various industries has led to the development of advanced cloud data platforms designed to handle the complexities of data management, processing, and analysis. Two prominent players in this space are Databricks and Snowflake, each offering unique strengths and capabilities. This article delves into the differences between Databricks and Snowflake, exploring their architectures, performance, scalability, cost, integration, and ecosystem to help data professionals make informed decisions about the right tool for their specific needs.

Difference between Snowflake and Databricks

Overview of Snowflake and Databricks

What is Snowflake?

Snowflake is a cloud-based data warehousing solution that provides a fully managed service with a focus on simplicity and performance. It is designed to handle structured and semi-structured data, offering robust SQL-based analytics capabilities.

Snowflake’s data platform is not built on an existing database technology or on “big data” software platforms such as Hadoop. Instead, it combines a new SQL query engine with an architecture designed natively for the cloud. To the user, Snowflake offers the capabilities of an enterprise analytic database, along with additional features such as data sharing and data cloning.

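Example of Snowflake (connecting and running a query with the Python connector):

    import snowflake.connector

    # Establish the connection (replace the placeholders with real credentials)
    conn = snowflake.connector.connect(
        user='your_username',
        password='your_password',
        account='your_account_identifier'
    )

    # Create a cursor object and run a simple query
    cur = conn.cursor()
    try:
        cur.execute("SELECT CURRENT_VERSION()")
        print(cur.fetchone()[0])
    finally:
        # Release the cursor and the connection
        cur.close()
        conn.close()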

What is Databricks?

Databricks, on the other hand, is a unified data analytics platform built on Apache Spark. It combines the functionalities of data lakes and data warehouses, making it suitable for a wide range of data types and analytics workloads, including machine learning and real-time data processing.

Databricks is a widely used open analytics platform for developing, implementing, sharing, and managing enterprise-grade data, analytics, and AI applications at scale.

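Example of Databricks (in a Databricks notebook a session named `spark` already exists, and getOrCreate() returns it):

    # Import necessary libraries
    from pyspark.sql import SparkSession

    # Create a Spark session, or reuse the active one
    spark = SparkSession.builder.appName("DatabricksExample").getOrCreate()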

Architectural Differences of Snowflake and Databricks

Snowflake Architecture

Snowflake employs a hybrid of shared-disk and shared-nothing designs that separates the storage and compute layers. Because the two layers scale independently, compute can be resized without moving data, which helps with both performance and cost. Snowflake’s architecture includes:

- Storage Layer: Stores data in a compressed, columnar format.

- Compute Layer: Consists of virtual warehouses that process queries in parallel.

- Cloud Services Layer: Handles authentication, infrastructure management, metadata, and query optimization.
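Because compute is decoupled from storage, a virtual warehouse can be created or resized without touching the stored data. The sketch below only builds the SQL statements and does not connect to Snowflake. The warehouse name `analytics_wh`, the helper function names, and the short list of sizes are assumptions made for illustration. The statements would be run through a connector cursor.

```python
# Sketch: SQL statements that size Snowflake compute independently of storage.
# The warehouse name and helper names are hypothetical; VALID_SIZES is a
# deliberately short subset of the sizes Snowflake offers.

VALID_SIZES = ("XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE")


def create_warehouse_sql(name: str, size: str = "XSMALL", auto_suspend_s: int = 60) -> str:
    """Build a CREATE WAREHOUSE statement that suspends itself when idle."""
    if size not in VALID_SIZES:
        raise ValueError(f"unsupported size: {size}")
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} "
        f"WITH WAREHOUSE_SIZE = '{size}' "
        f"AUTO_SUSPEND = {auto_suspend_s} AUTO_RESUME = TRUE"
    )


def resize_warehouse_sql(name: str, size: str) -> str:
    """Build an ALTER WAREHOUSE statement that changes only the compute size."""
    if size not in VALID_SIZES:
        raise ValueError(f"unsupported size: {size}")
    return f"ALTER WAREHOUSE {name} SET WAREHOUSE_SIZE = '{size}'"


statements = [
    create_warehouse_sql("analytics_wh"),
    resize_warehouse_sql("analytics_wh", "LARGE"),
]
for statement in statements:
    print(statement)
```

Neither statement references a table or a database, which is the point: resizing compute is a metadata operation that leaves stored data where it is.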

Databricks Architecture

Databricks is built on Apache Spark and implements a unified data lakehouse architecture. This architecture combines the best features of data lakes and data warehouses, providing a single platform for all data types and analytics workloads. Key components include:

- Delta Lake: Ensures data reliability and performance with ACID transactions.

- Compute Layer: Manages Spark clusters for distributed data processing.

- Data Management: Includes features like Unity Catalog for data governance and MLflow for machine learning lifecycle management.
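As a rough illustration of the Delta Lake component, the sketch below appends a small batch to a Delta table and reads it back. It assumes an active `SparkSession` with Delta Lake available (as on a Databricks cluster). The function name, the column names, and the table path are hypothetical.

```python
def append_and_read(spark, table_path: str):
    """Append two rows to a Delta table and return the table as a DataFrame.

    `spark` is assumed to be an active SparkSession with Delta Lake support.
    """
    rows = [(1, "created"), (2, "shipped")]
    df = spark.createDataFrame(rows, ["order_id", "status"])

    # Each write is committed atomically through the Delta transaction log.
    df.write.format("delta").mode("append").save(table_path)

    # Readers see only fully committed versions of the table.
    return spark.read.format("delta").load(table_path)
```

In a notebook this could be called as `append_and_read(spark, "/tmp/demo/orders").show()`, where the path is only a placeholder.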

Key Differences Between Databricks and Snowflake

| Category | Databricks | Snowflake |
| --- | --- | --- |
| Service Model | Platform-as-a-Service (PaaS): a managed platform for data engineering, data science, and machine learning. | Software-as-a-Service (SaaS): a fully managed service that abstracts much of the underlying infrastructure from the user. |
| Cloud Platform Support | AWS, Azure, and Google Cloud. | AWS, Azure, and Google Cloud. |
| Data Structures | All data types, including raw, audio, video, logs, and text. | Optimized for structured and semi-structured data. |
| Scalability | Fine-grained control over cluster scaling, so users can tune resource management to their needs. | Storage and compute scale independently and near-instantly, which makes sudden changes in workload easier to handle. |
| User-Friendliness | Steeper learning curve, owing to its extensive feature set and the technical expertise it requires. | Easy to adopt, especially for users already familiar with SQL. |
| Security Features | Role-based access control, data encryption, and network security. | End-to-end encryption for data at rest and in transit, and compliance with various industry standards. |
| Query Interface | SQL, Spark DataFrame, and Koalas (now the pandas API on Spark). | SQL, including for data sharing and data cloning. |
| Cost | Lower storage costs and less time to manage, resulting in lower operational costs. | Higher storage costs and longer administration time, resulting in higher operational costs. |
| Integration | Supports multiple programming languages, which eases integration with existing workflows. | Requires third-party integrations for some features, which can add complexity and cost. |
| Data Governance | Unified governance across all workloads, which simplifies managing data access and security. | Requires separate data governance tools, which can add complexity and overhead. |
| ETL | Native support for ETL, which simplifies managing data pipelines. | Requires additional tools for ETL, which can add complexity and cost. |
| Machine Learning | Built-in, unified tooling for developing and deploying ML models. | Relies mainly on third-party integrations, which can add complexity and cost. |
| Data Ingestion | Auto Loader and native integrations for ingesting data from various sources. | Traditional COPY INTO and Snowpipe, which can be more complex and time-consuming. |
| Data Applications | Supports developing and deploying data-driven applications. | Limited support for data applications; additional tools and integrations are required. |
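To make the data ingestion comparison concrete, the sketch below contrasts a Snowflake `COPY INTO` statement with a Databricks Auto Loader stream. It assumes a connector cursor would run the generated SQL and that `spark` is an active session on Databricks. The function names and every table, stage, and directory name are hypothetical.

```python
def copy_into_sql(table: str, stage: str, file_type: str = "CSV") -> str:
    """Snowflake bulk load: build a COPY INTO statement for a named stage."""
    return f"COPY INTO {table} FROM @{stage} FILE_FORMAT = (TYPE = '{file_type}')"


def start_auto_loader(spark, source_dir, schema_dir, checkpoint_dir, target_table):
    """Databricks Auto Loader: incrementally ingest new JSON files into a table."""
    stream = (
        spark.readStream.format("cloudFiles")           # Auto Loader source
        .option("cloudFiles.format", "json")             # format of incoming files
        .option("cloudFiles.schemaLocation", schema_dir) # where the inferred schema is tracked
        .load(source_dir)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_dir)    # remembers which files were processed
        .trigger(availableNow=True)                      # process the backlog, then stop
        .toTable(target_table)
    )


print(copy_into_sql("raw_events", "events_stage"))
```

The `COPY INTO` statement loads whatever is in the stage when it runs, while the Auto Loader stream keeps a checkpoint so that repeated runs pick up only files it has not seen.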

Storage Mechanisms

- Snowflake employs a columnar storage format, where data is organized by columns rather than rows. This format suits analytical workloads: values within a column compress well, which reduces the storage footprint, and queries that touch only a few columns read less data.

- Databricks offers more flexibility, supporting various storage options. The main one is Delta Lake, an open-source storage layer that adds the transactional capabilities of a data warehouse to data lake storage. The resulting lakehouse allows storing structured, semi-structured, and unstructured data within a unified platform.
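The benefit of a columnar layout can be shown with a small, purely conceptual Python sketch. It does not reflect how either platform lays out data on disk, and the sample records and names are made up. It only counts how many values must be touched to total a single column.

```python
# Toy comparison of row-oriented and column-oriented layouts (conceptual only).

rows = [
    {"order_id": 1, "region": "EU", "amount": 120.0},
    {"order_id": 2, "region": "US", "amount": 80.0},
    {"order_id": 3, "region": "EU", "amount": 50.0},
]


def to_columns(records):
    """Pivot row-oriented records into one list per column."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# Row layout: whole records are read even though only "amount" is needed.
row_values_touched = sum(len(record) for record in rows)
total_from_rows = sum(record["amount"] for record in rows)

# Column layout: only the "amount" column is read.
column_values_touched = len(columns["amount"])
total_from_columns = sum(columns["amount"])

print(total_from_rows, total_from_columns)        # 250.0 250.0
print(row_values_touched, column_values_touched)  # 9 3
```

Both layouts give the same total, but the columnar one reads a third of the values here, and the gap widens as tables gain more columns.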

Data Processing and Analytics

- Snowflake: Primarily focused on SQL-based data warehousing and analytics, Snowflake provides a SQL engine optimized for querying large datasets. For complex data transformations and machine learning tasks, however, additional tools might be needed, which increases integration complexity.

- Databricks: Built on Apache Spark, Databricks offers an engine for large-scale data processing and complex transformations. Spark’s in-memory processing can speed up multi-step and iterative transformations compared with engines that write intermediate results to disk. Databricks also works with machine learning libraries such as TensorFlow and PyTorch, supporting advanced data science workflows.
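The difference in programming model can be sketched by expressing one aggregation two ways in PySpark: as a SQL string, which is also how the query would look in Snowflake, and through the DataFrame API. The sketch assumes `spark` is an active session. The function names, the table name, and the columns `region` and `amount` are hypothetical.

```python
def revenue_by_region_sql(spark, table: str):
    """Aggregate with a SQL string."""
    return spark.sql(
        f"SELECT region, SUM(amount) AS revenue FROM {table} GROUP BY region"
    )


def revenue_by_region_df(spark, table: str):
    """The same aggregation written with the DataFrame API."""
    return (
        spark.table(table)
        .groupBy("region")
        .agg({"amount": "sum"})                        # yields a column named "sum(amount)"
        .withColumnRenamed("sum(amount)", "revenue")
    )
```

Both functions return a lazily evaluated DataFrame. The DataFrame form composes more naturally with ordinary Python code, which is one reason Spark is often preferred for multi-step transformation pipelines.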

Machine Learning Capabilities in Databricks and Snowflake

Databricks provides a built-in machine learning ecosystem, including MLflow for lifecycle management, for developing, training, and deploying models. It supports development in several programming languages, including Python, SQL, Scala, and R.

Snowflake does not ship its own general-purpose ML libraries. Instead, it provides connectors and drivers for linking external ML tools and libraries to its data. It also lets you query its storage layer and export the results for model training and testing. In short, Snowflake supports machine learning and analytics workloads, but through integration with other solutions rather than out of the box.
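A minimal sketch of that export path follows, assuming a cursor from the Snowflake Python connector with its optional pandas support installed. The function names and the split parameters are hypothetical. Model training itself would happen in an external library and is not shown.

```python
def load_features(cur, query: str):
    """Run a query in Snowflake and return the result as a pandas DataFrame."""
    cur.execute(query)
    # fetch_pandas_all() needs the connector's optional pandas dependencies.
    return cur.fetch_pandas_all()


def split_train_test(frame, test_fraction: float = 0.2, seed: int = 42):
    """Randomly hold out a fraction of the rows for testing."""
    test = frame.sample(frac=test_fraction, random_state=seed)
    train = frame.drop(test.index)
    return train, test
```

The two frames returned by `split_train_test` can then be passed to whichever ML library is in use outside Snowflake.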

Snowflake excels in:

- Data Warehousing: Ideal for storing and analyzing large, structured datasets for business intelligence and reporting.

- Scalability: Easy to scale compute resources up or down to meet fluctuating workloads.

- Ease of Use: User-friendly interface and familiar SQL language lower the barrier to entry for data analysts.

Databricks shines in:

- Data Engineering: Powerful data processing capabilities handle complex transformations and data pipelines.

- Machine Learning: Seamless integration with popular ML libraries facilitates advanced data science workflows.

- Flexibility: Supports various storage options and cluster configurations for diverse workloads.

In some cases, a hybrid approach might be optimal. Organizations can leverage Snowflake for data warehousing and core analytics, while utilizing Databricks for complex data engineering and machine learning tasks.

Conclusion

Snowflake and Databricks are both powerful platforms, each with its own strengths and ideal use cases. Snowflake excels in simplicity, performance, and ease of use for SQL-based analytics and data warehousing. Databricks, with its unified data lakehouse architecture, offers greater versatility and customization for data engineering, data science, and machine learning workloads.
