|

|

Deploying machine learning (ML) models into production environments is crucial for making their predictive capabilities accessible to users or other systems. This guide provides an in-depth look at the essential steps, strategies, and best practices for ML model deployment.

Importance of Model Deployment

Deployment transforms theoretical models into practical tools that can generate insights and drive decisions in real-world applications. For example, a deployed fraud detection model can analyze transactions in real time to prevent fraudulent activities.

Key Steps in Model Deployment

Pre-Deployment Preparation

To prepare for model deployment, it's crucial to select a model that meets both performance and production requirements.

This entails ensuring that the model achieves the desired metrics, such as accuracy and speed, and is scalable, reliable, and maintainable in a production environment. Thorough evaluation and testing of the model using validation data are essential to address any issues before deployment.

Setting up the production environment is equally important. This includes ensuring the availability of necessary hardware resources like CPUs and GPUs, installing required software dependencies, and configuring environment settings to match deployment requirements. Implementing security measures, monitoring tools, and backup procedures is also vital to protect the model and data, track performance, and recover from failures. Documentation of the setup and configuration is recommended for future reference and troubleshooting.

Deployment Strategies

The main strategies to focus on are:

Shadow Deployment involves running the new model alongside the existing one without affecting production traffic. This allows their performance to be compared in a real-world setting and helps ensure that the new model meets the required performance metrics before it is fully deployed.

Canary Deployment is a strategy where the new model is gradually rolled out to a small subset of users, while the majority of users still use the existing model. This allows the new model's performance to be monitored in a controlled environment before it is deployed to all users, and helps identify bugs or performance problems early on.

A/B Testing involves deploying different versions of the model to different user groups and comparing their performance. This allows for evaluating which version performs better in terms of metrics such as accuracy, speed, and user satisfaction, and supports an informed decision about which model version to deploy for all users.

Challenges in Model Deployment
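
Before turning to these challenges, here is a minimal sketch of how the canary split described above might be implemented. It assumes each request carries a stable user identifier; the function names `canary_bucket` and `choose_model` and the 5% rollout share are hypothetical and not taken from any particular serving framework. Hashing the identifier keeps each user on the same model version across requests, which makes the two groups easier to compare.

```python
import hashlib


def canary_bucket(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in the range [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def choose_model(user_id: str, canary_percent: int) -> str:
    """Return "canary" for roughly canary_percent% of users, else "stable"."""
    return "canary" if canary_bucket(user_id) < canary_percent else "stable"


if __name__ == "__main__":
    # Hypothetical user IDs, used only to show the approximate split.
    users = [f"user-{i}" for i in range(10_000)]
    share = sum(choose_model(u, 5) == "canary" for u in users) / len(users)
    print(f"canary share: {share:.3f}")
```

Raising `canary_percent` step by step widens the rollout, and any user already routed to the canary stays on it because their bucket number does not change.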

To address scalability issues, ensure that the model architecture is designed to handle increased traffic and data volume. Consider using distributed computing techniques such as parallel processing and data partitioning, and use scalable infrastructure such as cloud services that can dynamically allocate resources based on demand.

To meet latency constraints, optimize the model for inference speed by using efficient algorithms and model architectures. Deploy the model on hardware accelerators like GPUs or TPUs, and use caching and pre-computation techniques to reduce latency for frequently requested data.

To manage model retraining and updating, implement an automated pipeline for continuous integration and continuous deployment (CI/CD). This pipeline should include processes for data collection, model retraining, evaluation, and deployment. Use version control for models and data to track changes and roll back updates if necessary. Monitor the performance of the updated model to ensure it meets the required metrics.

Machine Learning System Architecture for Model Deployment

A typical machine learning system architecture for model deployment involves several key components. Firstly, the data pipeline is responsible for collecting, preprocessing, and transforming data for model input. Next, the model training component uses this data to train machine learning models, which are then evaluated and validated. Once a model is trained and ready for deployment, it is deployed using a serving infrastructure such as Kubernetes or TensorFlow Serving. The serving infrastructure manages the deployment of models, handling requests from clients and returning model predictions. Monitoring and logging components track the performance of deployed models, capturing metrics such as latency, throughput, and model accuracy. These metrics are used to continuously improve and optimize the deployed models.
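
The latency and throughput metrics mentioned above can be collected with a thin wrapper around the prediction function. The following is only an illustrative sketch: `LatencyMonitor` and its methods are hypothetical names, and a production system would more likely export these numbers to a dedicated monitoring service than keep them in memory.

```python
import time
from typing import Any, Callable, Dict, List


class LatencyMonitor:
    """Wrap a prediction function and record how long each call takes."""

    def __init__(self, predict_fn: Callable[[Any], Any]) -> None:
        self._predict_fn = predict_fn
        self.latencies_ms: List[float] = []

    def predict(self, features: Any) -> Any:
        start = time.perf_counter()
        result = self._predict_fn(features)
        self.latencies_ms.append((time.perf_counter() - start) * 1000.0)
        return result

    def summary(self) -> Dict[str, float]:
        """Return request count, mean and p95 latency, and throughput."""
        count = len(self.latencies_ms)
        if count == 0:
            return {"count": 0}
        ordered = sorted(self.latencies_ms)
        # Nearest-rank 95th percentile, computed with integer arithmetic.
        p95_index = (95 * count + 99) // 100 - 1
        total_seconds = sum(ordered) / 1000.0
        return {
            "count": count,
            "mean_ms": sum(ordered) / count,
            "p95_ms": ordered[p95_index],
            # Requests per second of time spent inside the model call.
            "throughput_rps": count / total_seconds if total_seconds > 0 else float("inf"),
        }


if __name__ == "__main__":
    monitor = LatencyMonitor(lambda features: sum(features))  # stand-in model
    for _ in range(100):
        monitor.predict([1.0, 2.0, 3.0])
    print(monitor.summary())
```

Note that the throughput figure here is based on time spent inside the model call only, so it is an upper bound on what the full service would achieve once network and queueing overhead are included.
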
A model management component also handles the lifecycle of models, including versioning, rollback, and A/B testing. This allows for the seamless deployment of new models and the ability to compare the performance of different model versions. Overall, this architecture enables the efficient deployment and management of machine learning models in production, ensuring scalability, reliability, and performance.

Tools and Platforms for Model Deployment

Here are some popular tools for deployment:

Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications, which helps keep them scalable and reliable.

Kubeflow is a machine learning toolkit built on top of Kubernetes that provides a set of tools for deploying, monitoring, and managing machine learning models in production. It simplifies the process of deploying and managing ML models on Kubernetes.

MLflow is an open-source platform for managing the end-to-end machine learning lifecycle. It provides tools for tracking experiments, packaging code, and managing models, enabling reproducibility and collaboration in ML projects.

TensorFlow Serving is a flexible and efficient serving system for deploying TensorFlow models in production. It allows for easy deployment of TensorFlow models as microservices, with support for serving multiple models simultaneously and scaling based on demand.

Best Practices for Machine Learning Deployment

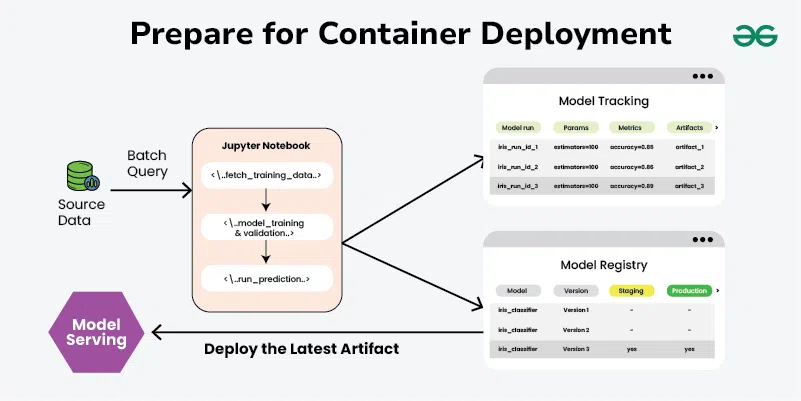

How to Deploy ML Models

Develop and Create a Model in a Training Environment: Build your model in an offline training environment using training data. ML teams often create multiple models, but only a few make it to deployment.

Optimize and Test Code: Ensure that your code is of high quality and can be deployed. Clean and optimize the code as necessary, and test it thoroughly to ensure it functions correctly in a live environment.

Prepare for Container Deployment: Containerize your model before deployment. Containers are predictable, repeatable, and easy to coordinate, making them ideal for deployment. They simplify deployment, scaling, modification, and updating of ML models.

Plan for Continuous Monitoring and Maintenance: Implement processes for continuous monitoring, maintenance, and governance of your deployed model. Continuously monitor for issues such as data drift, inefficiencies, and bias. Regularly retrain the model with new data to keep it effective over time.
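
As one way to act on the data drift monitoring described above, the sketch below compares the mean of a feature in live traffic against its mean in the training data. This is a deliberately simple heuristic chosen for illustration, not a method prescribed by this guide; the function names and the `threshold` of 0.5 training standard deviations are assumptions that would need tuning for a real feature.

```python
from statistics import mean, stdev
from typing import Sequence


def mean_shift_score(train_values: Sequence[float], live_values: Sequence[float]) -> float:
    """Shift of the live mean from the training mean, in training std units."""
    spread = stdev(train_values)  # needs at least two training values
    shift = abs(mean(live_values) - mean(train_values))
    if spread == 0:
        # A constant training feature: any change at all counts as a shift.
        return 0.0 if shift == 0 else float("inf")
    return shift / spread


def has_drifted(
    train_values: Sequence[float],
    live_values: Sequence[float],
    threshold: float = 0.5,  # hypothetical cutoff
) -> bool:
    """Flag the feature when its mean has moved more than `threshold` stds."""
    return mean_shift_score(train_values, live_values) > threshold


if __name__ == "__main__":
    # Made-up numbers, used only to exercise the check.
    training = [1.0, 2.0, 3.0, 4.0, 5.0]
    print(has_drifted(training, [1.5, 2.5, 3.0, 3.5, 4.5]))
    print(has_drifted(training, [11.0, 12.0, 13.0, 14.0, 15.0]))
```

A check like this could run on a schedule against recent requests, with a flagged feature triggering a closer look or a retraining job. It only detects shifts in the mean, so changes in the shape of a distribution would need a richer statistic.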

Conclusion

Deploying ML models into production environments is a multi-faceted process requiring careful planning, robust infrastructure, and continuous monitoring. By following best practices and leveraging the right tools, organizations can ensure their models provide reliable and valuable insights in real-world applications. Effective deployment transforms theoretical models into actionable tools that can drive business decisions and generate significant value. |

Referred: https://www.geeksforgeeks.org
