|

|

Light Gradient Boosting Machine (LightGBM) is an open-source and distributed gradient boosting framework that was developed by Microsoft Corporation. Unlike other traditional machine learning models, LightGBM can efficiently large datasets and has optimized training processes. LightGBM can be employed in classification, regression, and also in ranking tasks. For these reasons, LightGBM became very popular among Data Scientists and Machine learning researchers. LightGBMLightGBM is a gradient-boosting ensemble technique based on decision trees. LightGBM can be used for both classification and regression, just like other decision tree-based techniques. LightGBM is designed for great performance in distributed systems.LightGBM builds decision trees that develop leaf-wise, which implies that given a condition, just one leaf is split, depending on the benefit. Sometimes, especially with smaller datasets, leaf-wise trees might overfit. Overfitting can be prevented by limiting the tree depth. Data is divided into bins by LightGBM’s histogram-based approach, which employs a distribution histogram to bucket the data. For iteration, gain calculation, and data splitting, the bins are employed rather than each data point. For a sparse dataset, this technique can also be enhanced. Exclusive feature bundling, a feature of LightGBM that allows the algorithm to bundle just the most advantageous characteristics in order to minimize dimensionality and speed up computation, is another element of the system. The gradient-based dataset in LightGBM is sampled using one-side sampling (GOSS). Data points with greater gradients are given more weight when computing gain by GOSS. Instances that have not been effectively used for training contribute more in this manner. To maintain accuracy, data points with smaller gradients are arbitrarily deleted while some are kept. Given the same sampling rate as random sampling, this approach is often superior. Strategies in LightGBMThe LightGBM gradient boosting framework uses a number of cutting-edge algorithms and techniques to accelerate training and enhance model performance. Here is a quick breakdown of a few of the main tactics employed by LightGBM:

Benefits of training a model using LightGBMThere are several advantages we can get if we use LightGBM to train a model which are discussed below:

Implementation to train a model using LightGBMInstalling modulesTo train a model using LightGBM we need to install it to our runtime. !pip install lightgbm Importing required librariesPython3

First we will import all required Python libraries like NumPy, Pandas, Seaborn, Matplotlib and SKlearn etc. Loading Dataset and data pre-processingPython3

This code loads the Breast Cancer dataset from Scikit-Learn, which consists of features X and labels Y, and uses train_test_split to divide it into training and testing sets (80% for training and 20% for testing). To guarantee the split’s reproducibility, the random_state parameter is set. Exploratory data analysisNow we will perform some EDA on the Iris dataset to understand it more deeply. Distribution of Target Classes Python3

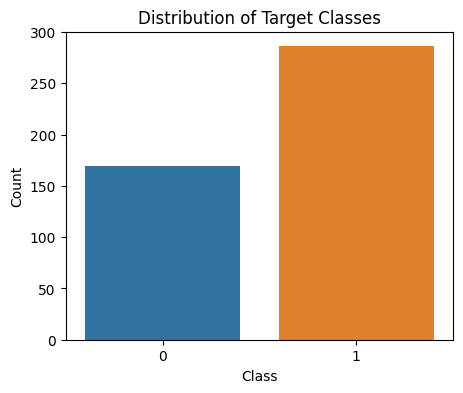

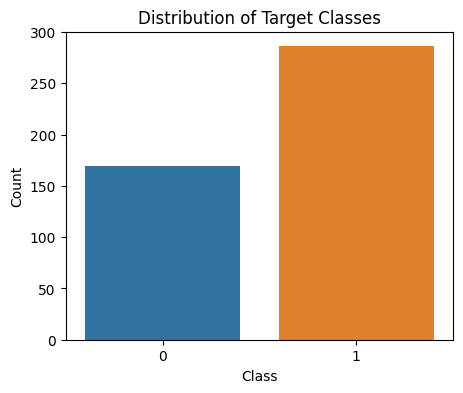

Output:  Target class distribution of SKlearn breast cancer dataset This will help us to understand the class distribution of of the target variable. Here our target variable has two classes that are Malignant and Benign. The bincount function in NumPy is used in this code to count the samples in each class of the training data. The distribution of the target classes is then depicted in a bar plot using Seaborn, with class labels on the x-axis and class counts on the y-axis. Correlation Matrix For plotting a correlation matrix , first of all we will be converting the data into dataframe as dataset being a 1-Dimensional and due to that correlation matrix cannot be plotted. Converting data to datafrome Python3

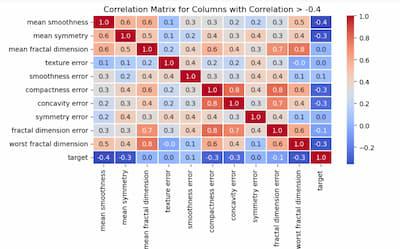

The code creates a correlation matrix for a pandas DataFrame df, finds columns that have a correlation higher than a given threshold with a “target” column, and then computes a correlation matrix for only those selected columns, effectively filtering for high-correlation relationships. Plotting Correlation Matrix Python3

Output:  Correlation Matrix A correlation matrix’s columns with a correlation with the ‘target’ column above a given threshold are initially identified by this code. In order to make it easier to study high-correlation associations with the “target,” it then constructs a subset DataFrame comprising only those chosen columns and generates a heatmap to illustrate the correlation matrix of those filtered columns. Creating LightGBM datasetTo train a model using LightGBM, we need to perform this extra step. The raw dataset can’t be feed directly to the LightGBM as it has its own dataset format which is very much different from traditional NumPy arrays or Pandas Data Frames. This special data format is used for optimized internal processes during training phase. Python3

The data are prepared for a LightGBM model’s training by this code. In order to guarantee consistent feature mapping throughout model assessment, it builds LightGBM datasets for both the training and testing sets, linking the testing dataset with the reference of the training dataset. Model trainingPython3

Output: [LightGBM] [Info] Number of positive: 286, number of negative: 169 Now we will train the Binary classification model using LightGBM. For this we need to define various hyperparameters of the LightGBM model which are listed below:

Model EvaluationNow we will evaluate our model based on model evaluation metrics like accuracy, precision, recall and F1-score. Python3

Output: Accuracy: 0.9561 This code initially uses the test data to create predictions using a LightGBM model (assumed to be stored in the bst variable). Then, using a threshold of 0.5, it turns these anticipated probabilities into binary predictions. It then assesses the model’s performance based on standard classification measures like accuracy, precision, recall, and F1-score and outputs the findings. Classification ReportPython3

Output: Classification Report: With the help of this code, a classification report for a test dataset’s predictions from a machine learning model is produced. Each class in the target variable is given a full overview of several classification metrics in the report, including precision, recall, F1-score, and support. ConclusionIn conclusion, using LightGBM to conduct binary classification tasks has shown to be a highly effective way to improve model performance. The 95.61% accuracy and remarkable 96.50% F1-score gained show how effective LightGBM is at enhancing model accuracy and precision. Despite the extraordinary nature of these results, it’s vital to keep in mind that accuracy may be significantly lower in real-world circumstances with larger datasets. In spite of this, the general trend shows that LightGBM can be a potent tool for improving model performance, making it a worthwhile option for a variety of machine learning applications, particularly when working with complicated and high-dimensional data. For large-scale applications where model accuracy and speed are essential components, its speed and efficiency make it particularly ideal. |

Reffered: https://www.geeksforgeeks.org

| AI ML DS |

Type: | Geek |

Category: | Coding |

Sub Category: | Tutorial |

Uploaded by: | Admin |

Views: | 13 |