Apache Flink is an open-source stream processing framework developed by the Apache Software Foundation. It is designed to process both real-time data streams and batch data. Flink provides fault tolerance, high throughput, low-latency processing, and exactly-once processing semantics. It also supports event-time processing, which is crucial for handling out-of-order data in streaming applications. Flink is used across industries for tasks such as real-time analytics, fraud detection, and monitoring. Flink offers several benefits:

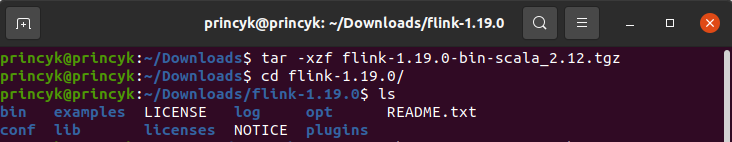

Flink Installation Steps

Follow these steps to install Flink:

Step 1: Flink requires Java, so first check that Java is installed correctly: `java -version`. If Java is installed correctly, continue to the next step.

Step 2: Download the Flink tar file from the official Flink site.

Step 3: Go to the directory that contains the downloaded tar file and extract it: `cd Downloads/`, then `tar -xzf flink-1.19.0-bin-scala_2.12.tgz`. Then move into the extracted directory: `cd flink-1.19.0/`.

Step 4: Flink is now installed. To check that it works properly, first start the local Flink cluster: `./bin/start-cluster.sh`.

Step 5: Submit a job as a JAR file: `./bin/flink run examples/streaming/WordCount.jar`.

Step 6: View the job's output in the task executor log: `tail log/flink-*-taskexecutor-*.out`.
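Steps 1 through 6 above can be collected into one script. The following is a minimal sketch, not an official installer: the download URL assumes the Apache archive layout, the tarball is fetched into the current directory rather than `Downloads/`, and the function name `install_and_run_wordcount` is made up for illustration.

```shell
#!/usr/bin/env sh
# Sketch: Steps 1-6 of this guide as a single script.
set -eu

FLINK_VERSION="1.19.0"   # version used in this guide
SCALA_VERSION="2.12"     # Scala suffix of the binary release used in this guide
FLINK_DIR="flink-${FLINK_VERSION}"
TARBALL="${FLINK_DIR}-bin-scala_${SCALA_VERSION}.tgz"
# Assumption: the release is fetched from the Apache archive.
DOWNLOAD_URL="https://archive.apache.org/dist/flink/${FLINK_DIR}/${TARBALL}"

install_and_run_wordcount() {
  java -version                                      # Step 1: Java must be installed
  curl -fLO "$DOWNLOAD_URL"                          # Step 2: download the tar file
  tar -xzf "$TARBALL"                                # Step 3: extract it ...
  cd "$FLINK_DIR"                                    # ... and enter the directory
  ./bin/start-cluster.sh                             # Step 4: start the local cluster
  ./bin/flink run examples/streaming/WordCount.jar   # Step 5: submit the example job
  tail log/flink-*-taskexecutor-*.out                # Step 6: show the job output
}

# Nothing is downloaded or started unless the script is called with --run.
if [ "${1:-}" = "--run" ]; then
  install_and_run_wordcount
fi
```

Save it under any name and run it with `--run` as its only argument. The cluster is left running afterwards so that you can inspect the job before stopping it.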

Once the cluster is running, the Flink web UI is also available at localhost:8081.

Step 7: Stop the local Flink cluster: `./bin/stop-cluster.sh`.

Scenario 2: To run your own JAR (for example, one built from a Maven project) on the Flink cluster: `./bin/flink run Test-1.0-SNAPSHOT.jar`.
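For Scenario 2, it can help to confirm that the cluster is reachable before submitting your own JAR. The sketch below rests on several assumptions that are not part of the original guide: the JAR is built with `mvn clean package` into Maven's default `target/` directory, `FLINK_HOME` is a hypothetical variable pointing at the extracted `flink-1.19.0` directory, and the cluster check queries the `/overview` REST endpoint served on the same port as the web UI. The function names are made up for illustration.

```shell
#!/usr/bin/env sh
# Sketch: check that the local cluster is up, then submit a Maven-built JAR.
set -eu

FLINK_UI="http://localhost:8081"         # web UI address from this guide
JOB_JAR="target/Test-1.0-SNAPSHOT.jar"   # assumption: Maven's default output path

# Succeeds once the REST endpoint behind the web UI answers.
cluster_is_up() {
  curl -fs "${FLINK_UI}/overview" > /dev/null
}

# Builds the project and submits the resulting JAR.
# Assumption: run from the Maven project root with FLINK_HOME set.
submit_maven_job() {
  mvn clean package
  if ! cluster_is_up; then
    echo "Flink is not reachable at ${FLINK_UI}; run start-cluster.sh first" >&2
    return 1
  fi
  "${FLINK_HOME}/bin/flink" run "$JOB_JAR"
}

# Call submit_maven_job once FLINK_HOME is set and the cluster is started.
```

Checking the endpoint first gives a clearer error message than a failed submission when the cluster was never started.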

Real-World Use Cases and Applications

Real-Time Analytics

Fraud Detection

Recommendation Systems

Batch Processing and ETL

The example Flink job for this guide consumes data from Kafka, aggregates it, and produces the aggregated results back into Kafka. It assumes Kafka is running locally on localhost:9092, with input data stored in a topic named input_topic. The job reads from this topic, performs a word count aggregation, and writes the aggregated results to another Kafka topic named output_topic. You may need to adjust the Kafka bootstrap server address and the topic names to match your setup.

Conclusion

Apache Flink is a strong platform for stream processing because of its low latency, fault tolerance, and support for both event-driven and batch processing. Its extensive APIs and straightforward integration make it a popular choice for businesses in many sectors that need timely insight from large volumes of data. Whether for real-time analytics, fraud detection, or complex ETL pipelines, Flink gives developers and data engineers the tools to build robust and efficient stream processing applications.

How to Install Flink – FAQs

What is Flink’s primary advantage over other stream processing frameworks?

How does Flink handle out-of-order data in streaming applications?

What are the key steps to install Apache Flink?

What are some common real-world applications of Apache Flink?

Referred: https://www.geeksforgeeks.org

Installation Guide

Type: Geek

Category: Coding

Sub Category: Tutorial

Uploaded by: Admin